Mirror of https://github.com/minio/minio.git, synced 2025-11-07 12:52:58 -05:00

cleanup markdown docs across multiple files (#14296)

enable markdown-linter


@@ -1,21 +1,23 @@

# Decommissioning

Decommissioning is a mechanism in MinIO to drain older pools (usually with old hardware) and migrate their content to newer pools (usually with better hardware). Decommissioning spreads the data across all remaining pools. For example, if you decommission `pool1`, all the data from `pool1` is spread across `pool2` and `pool3`.

## Features

- A pool in decommission still allows READ access to all its contents; new WRITEs are automatically scheduled only to the new pools.
- All versioned buckets maintain the same order of "versions" for each object after being decommissioned to the newer pools.
- A pool in decommission resumes from where it left off (for example, in case of cluster restarts, or restarts attempted after a failed decommission attempt).
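To make the read/write split concrete, here is a minimal Go sketch. The `poolInfo` type and the `writablePools`/`readablePools` helpers are hypothetical and only model the behavior described in the list above; they are not MinIO's internal API.

```go
package main

import "fmt"

// poolInfo is a hypothetical, simplified view of a server pool; the field
// names are illustrative only and are not MinIO's actual types.
type poolInfo struct {
	name            string
	freeBytes       uint64
	decommissioning bool
}

// writablePools returns the indices of pools that may receive new writes.
// A pool under decommission is skipped, so new objects land only on the
// remaining pools.
func writablePools(pools []poolInfo) []int {
	var idx []int
	for i, p := range pools {
		if !p.decommissioning {
			idx = append(idx, i)
		}
	}
	return idx
}

// readablePools returns every pool: a pool under decommission is still
// consulted for reads of the objects it holds.
func readablePools(pools []poolInfo) []int {
	idx := make([]int, len(pools))
	for i := range pools {
		idx[i] = i
	}
	return idx
}

func main() {
	pools := []poolInfo{
		{name: "pool1", freeBytes: 10 << 40, decommissioning: true},
		{name: "pool2", freeBytes: 40 << 40},
		{name: "pool3", freeBytes: 60 << 40},
	}
	fmt.Println("writes go to:", writablePools(pools))
	fmt.Println("reads check:", readablePools(pools))
}
```

Here `pool1` is excluded from new writes while all three pools remain readable.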

## How to decommission a pool?

```
λ mc admin decommission start alias/ http://minio{1...2}/data{1...4}
```

## Status of a decommissioning pool

### Status without arguments lists all pools

```
λ mc admin decommission status alias/
┌─────┬─────────────────────────────────┬──────────────────────────────────┬────────┐
@@ -25,7 +27,8 @@ Decommissiong is a mechanism in MinIO to drain older pools (usually with old har
└─────┴─────────────────────────────────┴──────────────────────────────────┴────────┘
```

### Decommissioning status

```
λ mc admin decommission status alias/ http://minio{1...2}/data{1...4}
Decommissioning rate at 36 MiB/sec [4 TiB/50 TiB]
@@ -39,13 +42,15 @@ Once it is **Complete**
Decommission of pool http://minio{1...2}/data{1...4} is complete, you may now remove it from server command line
```

### A pool that is not being decommissioned returns an error

```
λ mc admin decommission status alias/ http://minio{1...2}/data{1...4}
ERROR: This pool is not scheduled for decommissioning currently.
```

## Canceling a decommission

Stop an ongoing decommission. This is mainly useful when the load is too high and you want to reschedule the decommission for a later time.

Running `mc admin decommission cancel` without an argument lists any ongoing decommission.

@@ -69,6 +74,7 @@ Stop an on-going decommission in progress, mainly used in situations when the lo

If a decommission fails partway through, `status` reports it as failed instead.

```
λ mc admin decommission status alias/
┌─────┬─────────────────────────────────┬──────────────────────────────────┬──────────────────┐
@@ -78,14 +84,16 @@ If for some reason decommission fails in between, the `status` will indicate dec
└─────┴─────────────────────────────────┴──────────────────────────────────┴──────────────────┘
```

## Restart a canceled or failed decommission

```
λ mc admin decommission start alias/ http://minio{1...2}/data{1...4}
```

## When is a decommission 'Complete'?

Once decommissioning is complete, it is indicated with a *Complete* status. *Complete* means that you can now safely remove the first pool argument from the MinIO command line.

```
λ mc admin decommission status alias/
┌─────┬─────────────────────────────────┬──────────────────────────────────┬──────────┐
@@ -103,10 +111,12 @@ Once decommission is complete, it will be indicated with *Complete* status.  *Co
```

> Without a 'Complete' status, 'Active' or 'Draining' pools cannot be removed once configured.

## NOTE

- Empty delete markers, i.e. objects with no other successor versions, are not moved to the new pool, to avoid recreating empty metadata on the newer pool. We do not think this is needed; please open a GitHub issue if you think otherwise.

## TODO

- A richer progress UI is not available yet; this will be addressed in subsequent releases. Currently, however, `mc` displays the RATE of data transfer and the usage increase.
- Pooled setups used as transitioned hot tiers are not currently supported; the server rejects attempts to decommission buckets with ILM Transition. This will be supported in future releases.
- The embedded Console UI does not support decommissioning yet. This will be supported in future releases.


@@ -1,7 +1,9 @@

|

||||

# Distributed Server Design Guide [](https://slack.min.io)

|

||||

|

||||

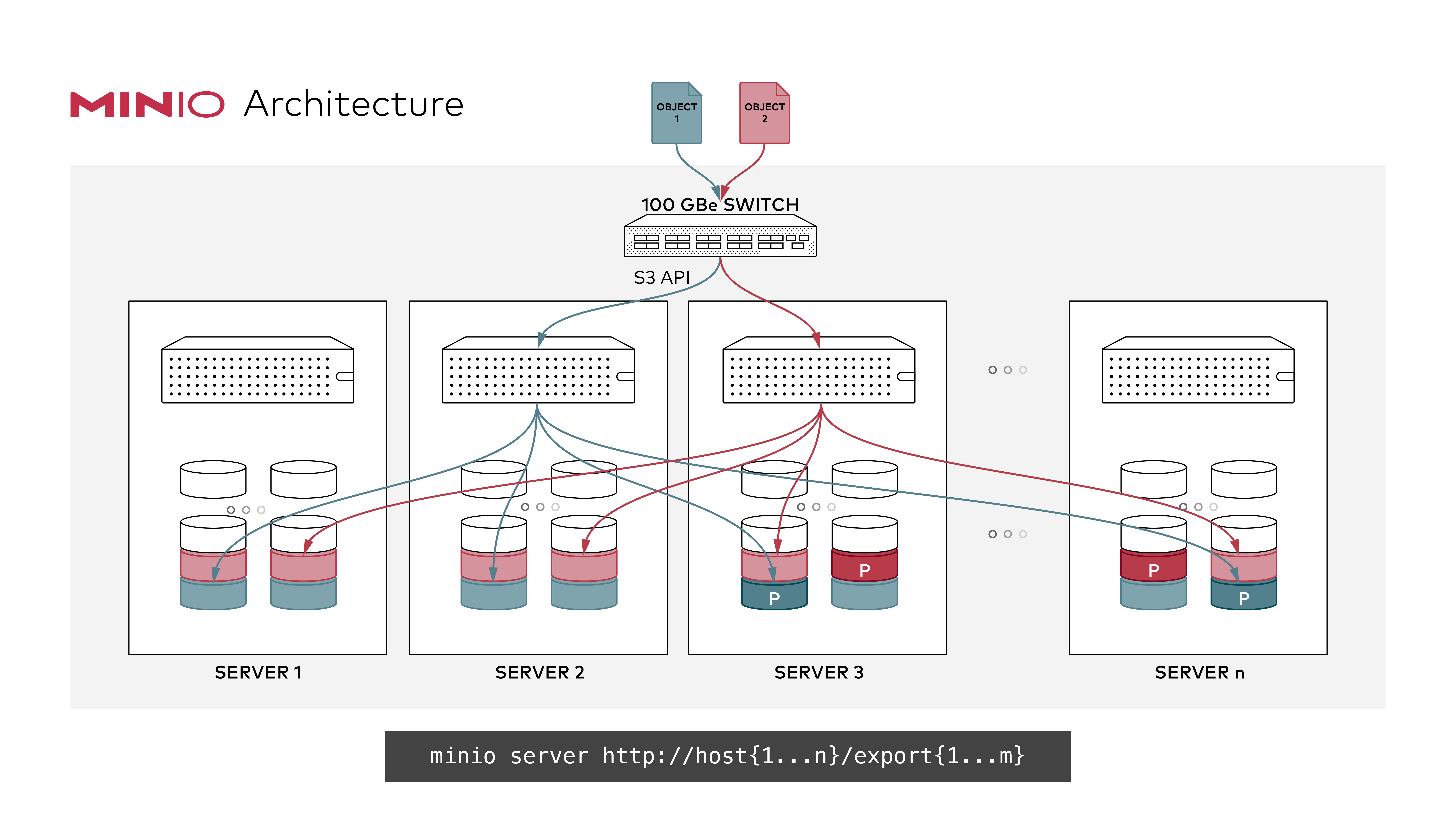

This document explains the design, architecture and advanced use cases of the MinIO distributed server.

## Command-line

```
NAME:
minio server - start object storage server
@@ -22,6 +24,7 @@ DIR:
```

## Common usage

Standalone erasure coded configuration with 4 sets with 16 disks each.

```
minio server dir{1...64}
```

@@ -44,7 +47,7 @@ Expansion of ellipses and choice of erasure sets based on this expansion is an a

- The erasure set size is chosen automatically based on the number of disks available. For example, with 32 servers of 32 disks each (1024 disks in total), 16 becomes the erasure set size. This is decided based on the greatest common divisor (GCD) of acceptable erasure set sizes, ranging from *4 to 16*.

- *If the total number of disks has many common divisors, the algorithm chooses the minimum number of erasure sets possible for an erasure set size of any N*. In the example with 1024 disks, 4, 8 and 16 are GCD factors. With 16 disks per set we get 64 sets, with 8 disks per set 128 sets, and with 4 disks per set 256 sets. So the algorithm automatically chooses 64 sets, which is `16 * 64 = 1024` disks in total.

- *If the total number of nodes is odd, the GCD algorithm gives affinity to odd erasure set sizes to provide uniform distribution across nodes*. This ensures that each node contributes the same number of disks to any erasure set. For example, with 2 nodes and 180 drives the GCD is 15, but this would lead to uneven distribution, since one of the nodes would contribute more drives. To avoid this, affinity is given to the number of nodes, which leads to the next best GCD factor of 12 and a uniform distribution.
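The selection rule in the list above can be sketched in Go. This is a simplified model, not MinIO's implementation: `chooseSetSize` is a hypothetical helper that takes the largest size from 16 down to 4 that divides the drive count (which yields the fewest sets), and it models node symmetry as "the set size is a multiple of the node count, or the node count is a multiple of the set size".

```go
package main

import "fmt"

// chooseSetSize is a simplified sketch of the erasure set size rule: try
// sizes from 16 down to 4 and return the largest one that divides the total
// drive count evenly and is symmetric with the node count, so every node
// contributes the same number of drives to each set.
func chooseSetSize(totalDrives, nodes int) (setSize, setCount int, ok bool) {
	for size := 16; size >= 4; size-- {
		if totalDrives%size != 0 {
			continue // size must divide the drive count
		}
		if size%nodes != 0 && nodes%size != 0 {
			continue // would spread a set's drives unevenly across nodes
		}
		return size, totalDrives / size, true
	}
	return 0, 0, false
}

func main() {
	cases := []struct{ drives, nodes int }{
		{1024, 32}, // 32 servers x 32 disks
		{180, 2},   // 2 nodes, 180 drives
		{64, 1},    // minio server dir{1...64}
	}
	for _, c := range cases {
		size, count, ok := chooseSetSize(c.drives, c.nodes)
		fmt.Printf("%d drives on %d nodes -> %d sets of %d (ok=%v)\n",
			c.drives, c.nodes, count, size, ok)
	}
}
```

Under these assumptions it reproduces the examples in the text: 64 sets of 16 for 1024 drives on 32 nodes, 15 sets of 12 for 180 drives on 2 nodes (15 is rejected because it cannot be split evenly across 2 nodes), and 4 sets of 16 for the standalone `dir{1...64}` case.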

@@ -55,6 +58,7 @@ minio server http://host{1...2}/export{1...8}

Expected expansion

```
> http://host1/export1
> http://host2/export1
@@ -77,6 +81,7 @@ Expected expansion
```

*A noticeable trait of this expansion is that it chooses unique hosts, such that the setup provides maximum protection and availability.*
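A minimal sketch of the expansion order for `http://host{1...2}/export{1...8}` follows. It assumes the host index varies fastest, as the first two lines of the expected expansion suggest; the rest of that listing is elided in this diff. `expandHostsFirst` is a hypothetical helper that only handles this two-level pattern, while MinIO's ellipses parser is more general.

```go
package main

import "fmt"

// expandHostsFirst is a hypothetical helper that expands
// http://host{1...hosts}/export{1...exports} with the host index varying
// fastest, so consecutive endpoints land on different hosts.
func expandHostsFirst(hosts, exports int) []string {
	var out []string
	for e := 1; e <= exports; e++ {
		for h := 1; h <= hosts; h++ {
			out = append(out, fmt.Sprintf("http://host%d/export%d", h, e))
		}
	}
	return out
}

func main() {
	eps := expandHostsFirst(2, 8)
	for _, ep := range eps[:4] {
		fmt.Println(ep)
	}
	fmt.Println(len(eps), "endpoints")
}
```

Interleaving hosts this way means that any run of consecutive endpoints, such as the drives grouped into one erasure set, is spread across as many hosts as possible.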

- The erasure set for an object is chosen during `PutObject()`: the object name is used to find the right erasure set, as in the following pseudocode.

```go
// sipHashMod hashes the key, returning an erasure set index in [0, cardinality).
func sipHashMod(key string, cardinality int, id [16]byte) int {
@@ -88,6 +93,7 @@ func sipHashMod(key string, cardinality int, id [16]byte) int {
	return int(sip.Sum64() % uint64(cardinality))
}
```

The key is the object name specified in `PutObject()`, and the function returns an index identifying the erasure set where the object will reside. The hash is consistent for a given object name, i.e. for a given object name the returned index is always the same.
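The same idea can be tried without third-party packages. The sketch below keeps the shape of `sipHashMod` but substitutes the standard library's FNV-1a hash for SipHash, writing the deployment ID before the key as a stand-in for SipHash's key. It therefore shows the deterministic name-to-set mapping but does not reproduce MinIO's actual indices. `hashMod` and the all-zero deployment ID are hypothetical.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// hashMod maps an object name to an erasure set index in [0, cardinality).
// FNV-1a stands in for SipHash here; the deployment ID is hashed first so
// different deployments map names differently.
func hashMod(key string, cardinality int, id [16]byte) int {
	if cardinality <= 0 {
		return -1 // no erasure sets to choose from
	}
	h := fnv.New64a()
	h.Write(id[:])
	h.Write([]byte(key))
	return int(h.Sum64() % uint64(cardinality))
}

func main() {
	var deploymentID [16]byte // hypothetical all-zero deployment ID
	for _, name := range []string{"photos/cat.png", "logs/app.txt"} {
		first := hashMod(name, 4, deploymentID)
		second := hashMod(name, 4, deploymentID)
		fmt.Println(name, "-> set", first, "repeatable:", first == second)
	}
}
```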

- Write and read quorum only need to be satisfied within the erasure set that holds an object. Healing is also done per object, within the erasure set that contains the object.

@@ -96,7 +102,7 @@ Input for the key is the object name specified in `PutObject()`, returns a uniqu

- MinIO also supports expansion of existing clusters in server pools. Each pool is a self-contained entity with the same SLAs (read/write quorum) for each object as the original cluster. By using the existing namespace for lookup validation, MinIO ensures conflicting objects are not created. When no such object exists, MinIO simply uses the least used pool to place new objects.

### There are no limits on how many server pools can be combined

```
minio server http://host{1...32}/export{1...32} http://host{1...12}/export{1...12}
```

@@ -112,6 +118,7 @@ In above example there are two server pools

Refer to the [sizing guide](https://github.com/minio/minio/blob/master/docs/distributed/SIZING.md) for details on the default parity count chosen for different erasure stripe sizes.

MinIO places new objects in server pools based on the proportion of free space in each pool. The following pseudocode demonstrates this behavior.

```go
func getAvailablePoolIdx(ctx context.Context) int {
	serverPools := z.getServerPoolsAvailableSpace(ctx)
@@ -135,21 +142,25 @@ func getAvailablePoolIdx(ctx context.Context) int {
```

### Advanced use cases with multiple ellipses

Standalone erasure coded configuration with 4 sets with 16 disks each, which spans disks across controllers.

```
minio server /mnt/controller{1...4}/data{1...16}
```

Standalone erasure coded configuration with 16 sets, 16 disks per set, across mounts and controllers.

```
minio server /mnt{1...4}/controller{1...4}/data{1...16}
```

Distributed erasure coded configuration with 2 sets, 16 disks per set, across hosts.

```
minio server http://host{1...32}/disk1
```

Distributed erasure coded configuration with rack-level redundancy: 32 sets in total, 16 disks per set.

```
minio server http://rack{1...4}-host{1...8}.example.net/export{1...16}
```

@@ -38,24 +38,24 @@ Install MinIO - [MinIO Quickstart Guide](https://docs.min.io/docs/minio-quicksta

To start a distributed MinIO instance, pass the drive locations as parameters to the `minio server` command, then run the same command on all the participating nodes.

**NOTE:**

- All the nodes running distributed MinIO should share common root credentials, so that the nodes can connect to and trust each other. To achieve this, it is **recommended** to export the root user and root password as the environment variables `MINIO_ROOT_USER` and `MINIO_ROOT_PASSWORD` on all the nodes before executing the MinIO server command. If they are not exported, the default `minioadmin/minioadmin` credentials are used.
- **MinIO creates erasure-coding sets of _4_ to _16_ drives per set. The number of drives you provide in total must be a multiple of one of those numbers.**
- **MinIO chooses the largest EC set size which divides into the total number of drives or total number of nodes given, making sure to keep the distribution uniform, i.e. each node contributes an equal number of drives to each set**.
- **Each object is written to a single EC set, and therefore is spread over no more than 16 drives.**
- **All the nodes running a distributed MinIO setup are recommended to be homogeneous, i.e. same operating system, same number of disks and same network interconnects.**
- MinIO distributed mode requires **fresh directories**. If required, the drives can be shared with other applications by using a sub-directory exclusive to MinIO. For example, if you have mounted your volume under `/export`, pass `/export/data` as arguments to the MinIO server.
- The IP addresses and drive paths below are for demonstration purposes only; you need to replace these with the actual IP addresses and drive paths/folders.
- Servers running distributed MinIO instances should be less than 15 minutes apart. You can enable the [NTP](http://www.ntp.org/) service as a best practice to ensure the same time across servers.
- The `MINIO_DOMAIN` environment variable should be defined and exported for bucket DNS style support.
- Running distributed MinIO on the **Windows** operating system is considered **experimental**. Please proceed with caution.

Example 1: Start a distributed MinIO instance on n nodes with m drives each, mounted at `/export1` to `/exportm` (pictured below), by running this command on all n nodes:

### GNU/Linux and macOS

```sh
export MINIO_ROOT_USER=<ACCESS_KEY>
@@ -63,11 +63,11 @@ export MINIO_ROOT_PASSWORD=<SECRET_KEY>
minio server http://host{1...n}/export{1...m}
```

> **NOTE:** In the above example, `n` and `m` represent positive integers. _Do not copy and paste the command and expect it to work; make the changes according to your local deployment and setup._

> **NOTE:** `{1...n}` has 3 dots! Using only 2 dots `{1..n}` will be interpreted by your shell and won't be passed to the MinIO server, affecting the erasure coding order, which would impact performance and high availability. **Always use the ellipses syntax `{1...n}` (3 dots!) for optimal erasure-code distribution.**

### Expanding existing distributed setup

MinIO supports expanding distributed erasure coded clusters by specifying a new set of clusters on the command-line, as shown below:

```sh
@@ -77,18 +77,21 @@ minio server http://host{1...n}/export{1...m} http://host{o...z}/export{1...m}
```

For example:

```
minio server http://host{1...4}/export{1...16} http://host{5...12}/export{1...16}
```

Now the server has expanded total storage by _(newly_added_servers\*m)_ more disks, taking the total count to _(existing_servers\*m)+(newly_added_servers\*m)_ disks. In this example, the first pool has 64 disks (4 hosts with 16 disks each) and the second has 128 (8 hosts with 16 disks each), 192 in total. New object upload requests automatically start using the least used cluster. This expansion strategy works endlessly, so you can perpetually expand your clusters as needed. When you restart, it is immediate and non-disruptive to the applications. Each group of servers in the command-line is called a pool. There are 2 server pools in this example. New objects are placed in server pools in proportion to the amount of free space in each pool. Within each pool, the location of the erasure-set of drives is determined based on a deterministic hashing algorithm.

> **NOTE:** **Each pool you add must have the same erasure coding parity configuration as the original pool, so the same data redundancy SLA is maintained.**

## 3. Test your setup

To test this setup, access the MinIO server via a browser or [`mc`](https://docs.min.io/docs/minio-client-quickstart-guide).

## Explore Further

- [MinIO Erasure Code QuickStart Guide](https://docs.min.io/docs/minio-erasure-code-quickstart-guide)
- [Use `mc` with MinIO Server](https://docs.min.io/docs/minio-client-quickstart-guide)
- [Use `aws-cli` with MinIO Server](https://docs.min.io/docs/aws-cli-with-minio)

@@ -1,6 +1,6 @@

# Erasure code sizing guide

## Toy Setups

Capacity-constrained environments: MinIO will work, but these setups are not recommended for production.

@@ -13,7 +13,7 @@ Capacity constrained environments, MinIO will work but not recommended for produ

| 6 | 1 | 6 | 3 | 3 | 2 |
| 7 | 1 | 7 | 3 | 3 | 3 |

## Minimum System Configuration for Production

| servers | drives (per node) | stripe_size | parity chosen (default) | tolerance for reads (servers) | tolerance for writes (servers) |
|--------:|------------------:|------------:|------------------------:|------------------------------:|-------------------------------:|

@@ -32,7 +32,7 @@ Capacity constrained environments, MinIO will work but not recommended for produ

| 15 | 2 | 15 | 4 | 4 | 4 |
| 16 | 2 | 16 | 4 | 4 | 4 |
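The tolerance columns can be cross-checked with a short calculation. This sketch assumes that read quorum equals the number of data drives and that write quorum equals the data drives, plus one when data and parity are equal (as in the 6-drive row). It counts drives per erasure set, which equals servers when each server holds at most one drive of a set. `tolerance` is a hypothetical helper, not MinIO's code.

```go
package main

import "fmt"

// tolerance returns how many drives of one erasure set can be lost while
// reads and writes still succeed, given the stripe size and parity count.
func tolerance(stripe, parity int) (reads, writes int) {
	data := stripe - parity
	readQuorum := data
	writeQuorum := data
	if data == parity {
		writeQuorum++ // break ties so two halves cannot both accept writes
	}
	return stripe - readQuorum, stripe - writeQuorum
}

func main() {
	for _, row := range [][2]int{{6, 3}, {7, 3}, {15, 4}, {16, 4}} {
		r, w := tolerance(row[0], row[1])
		fmt.Printf("stripe %2d parity %d -> reads %d, writes %d\n", row[0], row[1], r, w)
	}
}
```

For the stripe/parity pairs 6/3, 7/3, 15/4 and 16/4 it yields read/write tolerances of 3/2, 3/3, 4/4 and 4/4, matching the rows shown above.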

If one or more disks are offline at the start of a PutObject or NewMultipartUpload operation, the object automatically gets additional data protection bits, to provide the regular safety for these objects, up to 50% of the number of disks. This allows normal write operations to take place on systems that exceed the write tolerance.
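The automatic parity increase described above can be sketched as follows. The sketch assumes one extra parity drive per drive that is offline when the upload starts, capped at half of the stripe. `effectiveParity` is a hypothetical helper, not MinIO's code.

```go
package main

import "fmt"

// effectiveParity starts from the default parity, adds one parity drive per
// offline drive, and never exceeds half of the stripe size.
func effectiveParity(stripe, defaultParity, offline int) int {
	p := defaultParity + offline
	if limit := stripe / 2; p > limit {
		p = limit
	}
	return p
}

func main() {
	fmt.Println(effectiveParity(16, 4, 0)) // 4
	fmt.Println(effectiveParity(16, 4, 2)) // 6
	fmt.Println(effectiveParity(16, 4, 6)) // 8 (capped at 16/2)
}
```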
