mirror of https://github.com/minio/minio.git, synced 2025-11-07 21:02:58 -05:00

doc: Merge large bucket with distributed docs (#7761)

committed by kannappanr

parent d90d4841b8

commit a075015293

@@ -14,23 +14,23 @@ Distributed MinIO provides protection against multiple node/drive failures and [

A stand-alone MinIO server would go down if the server hosting the disks goes offline. In contrast, a distributed MinIO setup with _n_ disks will have your data safe as long as _n/2_ or more disks are online. You'll need a minimum of _(n/2 + 1)_ [Quorum](https://github.com/minio/dsync#lock-process) disks to create new objects though.

For example, an 8-node distributed MinIO setup with 1 disk per node would continue serving files, even if up to 4 disks are offline. But, you'll need at least 5 disks online to create new objects.

For example, a 16-node distributed MinIO setup with 16 disks per node would continue serving files, even if up to 8 servers are offline. But, you'll need at least 9 servers online to create new objects.
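The quorum arithmetic described above can be sketched in shell. This is a minimal illustration, not MinIO code: the function names `read_quorum` and `write_quorum` are hypothetical, and the sketch assumes an even count _n_ of disks (or servers).

```sh
# Hypothetical helpers, not part of MinIO: quorum sizes for n disks or servers.

# Data stays readable while at least n/2 are online.
read_quorum() {
    echo $(( $1 / 2 ))
}

# Creating new objects needs n/2 + 1 online.
write_quorum() {
    echo $(( $1 / 2 + 1 ))
}

read_quorum 8     # prints 4
write_quorum 8    # prints 5
read_quorum 16    # prints 8
write_quorum 16   # prints 9
```

The last two calls reproduce the 16-server example: files keep being served with 8 servers online, and 9 are needed to create new objects.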

### Limits

As with MinIO in stand-alone mode, distributed MinIO has a per tenant limit of minimum 2 and maximum 32 servers. There are no limits on number of disks shared across these servers. If you need a multiple tenant setup, you can easily spin up multiple MinIO instances managed by orchestration tools like Kubernetes.

As with MinIO in stand-alone mode, distributed MinIO has a per-tenant limit of a minimum of 2 and a maximum of 32 servers. There is no limit on the number of disks across these servers. If you need a multi-tenant setup, you can easily spin up multiple MinIO instances managed by orchestration tools like Kubernetes or Docker Swarm.

Note that with distributed MinIO you can play around with the number of nodes and drives as long as the limits are adhered to. For example, you can have 2 nodes with 4 drives each, 4 nodes with 4 drives each, 8 nodes with 2 drives each, 32 servers with 24 drives each and so on.

Note that with distributed MinIO you can play around with the number of nodes and drives as long as the limits are adhered to. For example, you can have 2 nodes with 4 drives each, 4 nodes with 4 drives each, 8 nodes with 2 drives each, 32 servers with 64 drives each and so on.
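As a rough sketch of the server limit stated above, a script could check a planned server count before deployment. The helper name `valid_server_count` is hypothetical and is not provided by MinIO. It only checks the 2 to 32 server range, since the text places no limit on the number of disks.

```sh
# Hypothetical helper, not part of MinIO: succeed only when the server count
# falls within the documented per-tenant range of 2 to 32 servers.
valid_server_count() {
    [ "$1" -ge 2 ] && [ "$1" -le 32 ]
}

valid_server_count 8  && echo "8 servers: within limits"
valid_server_count 64 || echo "64 servers: outside limits"
```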

You can also use [storage classes](https://github.com/minio/minio/tree/master/docs/erasure/storage-class) to set custom data and parity distribution across total disks.

You can also use [storage classes](https://github.com/minio/minio/tree/master/docs/erasure/storage-class) to set custom data and parity distribution per object.

### Consistency Guarantees

MinIO follows strict **read-after-write** consistency model for all i/o operations both in distributed and standalone modes.

MinIO follows a strict **read-after-write** and **list-after-write** consistency model for all I/O operations, both in distributed and standalone modes.

# Get started

If you're aware of stand-alone MinIO set up, the process remains largely the same, as the MinIO server automatically switches to stand-alone or distributed mode, depending on the command line parameters.

If you're familiar with stand-alone MinIO setup, the process remains largely the same. MinIO server automatically switches to stand-alone or distributed mode, depending on the command line parameters.

## 1. Prerequisites

@@ -43,25 +43,25 @@ To start a distributed MinIO instance, you just need to pass drive locations as

*Note*

- All the nodes running distributed MinIO need to have same access key and secret key for the nodes to connect. To achieve this, it is **mandatory** to export access key and secret key as environment variables, `MINIO_ACCESS_KEY` and `MINIO_SECRET_KEY`, on all the nodes before executing MinIO server command.

- All the nodes running distributed MinIO need to be in a homogeneous environment, i.e. same operating system, same number of disks and same interconnects.

- `MINIO_DOMAIN` environment variable should be defined and exported if domain is needed to be set.

- MinIO distributed mode requires fresh directories. If required, the drives can be shared with other applications. You can do this by using a sub-directory exclusive to minio. For example, if you have mounted your volume under `/export`, pass `/export/data` as arguments to MinIO server.

- It is recommended that all nodes running a distributed MinIO setup be in a homogeneous environment, i.e. same operating system, same number of disks and same network interconnects.

- MinIO distributed mode requires fresh directories. If required, the drives can be shared with other applications. You can do this by using a sub-directory exclusive to MinIO. For example, if you have mounted your volume under `/export`, pass `/export/data` as arguments to MinIO server.

- The IP addresses and drive paths below are for demonstration purposes only, you need to replace these with the actual IP addresses and drive paths/folders.

- Servers running distributed MinIO instances should be less than 3 seconds apart. You can use [NTP](http://www.ntp.org/) as a best practice to ensure consistent times across servers.

- Running Distributed MinIO on Windows is experimental as of now. Please proceed with caution.

- The clocks of servers running distributed MinIO instances should be less than 15 minutes apart. You can enable the [NTP](http://www.ntp.org/) service as a best practice to keep time consistent across servers.

- Running Distributed MinIO on Windows operating system is experimental. Please proceed with caution.

- `MINIO_DOMAIN` environment variable should be defined and exported if domain is needed to be set.
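The credential prerequisite in the notes above could be checked with a small pre-flight function before launching the server on each node. This is only a sketch: `check_minio_env` is a hypothetical helper, not something MinIO ships. It assumes a variable counts as ready only when it is exported and non-empty.

```sh
# Hypothetical pre-flight helper, not part of MinIO: fail early when the shared
# credentials have not been exported on this node.
check_minio_env() {
    for name in MINIO_ACCESS_KEY MINIO_SECRET_KEY; do
        # `env` lists exported variables only; require a non-empty value.
        if ! env | grep -q "^${name}=."; then
            echo "error: ${name} is not exported" >&2
            return 1
        fi
    done
    return 0
}
```

A node's start script might then run `check_minio_env && minio server ...` with the same arguments used on every other node.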

Example 1: Start distributed MinIO instance on 32 nodes with 32 drives each mounted at `/export1` to `/export32` (pictured below), by running this command on all the 32 nodes:

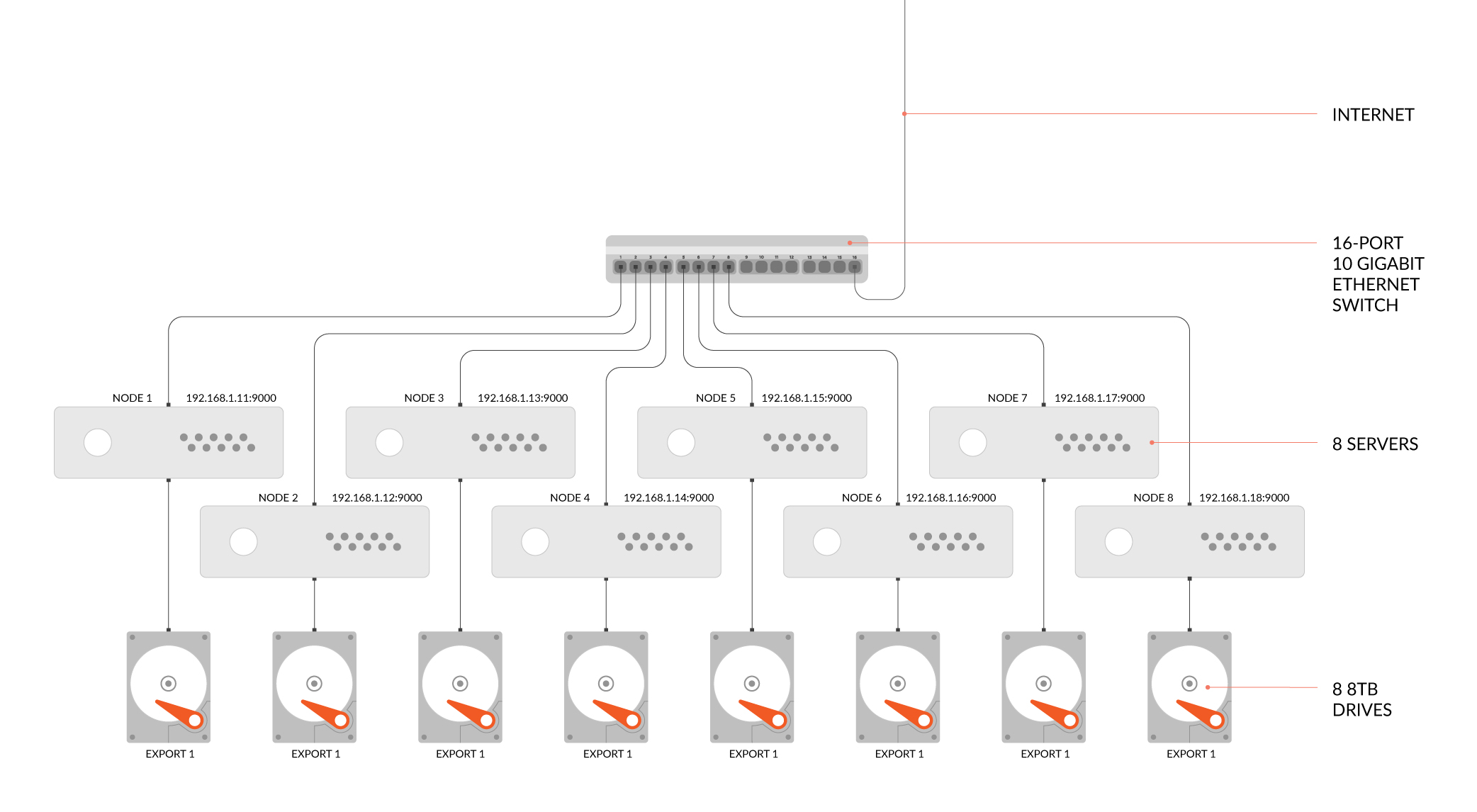

Example 1: Start distributed MinIO instance on 8 nodes with 1 disk each mounted at `/export1` (pictured below), by running this command on all the 8 nodes:

#### GNU/Linux and macOS

```sh
export MINIO_ACCESS_KEY=<ACCESS_KEY>
export MINIO_SECRET_KEY=<SECRET_KEY>
minio server http://192.168.1.1{1...8}/export1
minio server http://host{1...32}/export{1...32}
```

__NOTE:__ `{1...n}` shown have 3 dots! Using only 2 dots `{1..4}` will be interpreted by your shell and won't be passed to minio server, affecting the erasure coding order, which may impact performance and high availability. __Always use `{1...n}` (3 dots!) to allow minio server to optimally erasure-code data__

__NOTE:__ The `{1...n}` ranges shown have 3 dots! A range with only 2 dots, such as `{1..32}`, is expanded by your shell and never reaches the MinIO server as a range, which affects the erasure coding order and may impact performance and high availability. __Always use the ellipsis syntax `{1...n}` (3 dots!) for optimal erasure-code distribution.__
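The difference between the two spellings can be seen by letting bash echo the arguments back. This sketch assumes bash is installed, since other shells may treat braces differently. `host` and `export1` are placeholder names, and a short range of 3 keeps the output readable.

```sh
# Two dots: bash expands the range itself, producing three separate arguments.
two_dots=$(bash -c 'echo http://host{1..3}/export1')

# Three dots: not a valid bash range, so the argument is passed through untouched.
three_dots=$(bash -c 'echo http://host{1...3}/export1')

echo "$two_dots"     # prints http://host1/export1 http://host2/export1 http://host3/export1
echo "$three_dots"   # prints http://host{1...3}/export1
```

Only the second form reaches the server as a single range argument.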

## 3. Test your setup

To test this setup, access the MinIO server via browser or [`mc`](https://docs.min.io/docs/minio-client-quickstart-guide).
