Mirror of <https://github.com/minio/minio.git>, synced 2025-11-09 21:49:46 -05:00.

@@ -11,5 +11,3 @@ MinIO Gateway adds Amazon S3 compatibility layer to third party NAS and Cloud St

- [NAS](https://github.com/minio/minio/blob/master/docs/gateway/nas.md)
- [S3](https://github.com/minio/minio/blob/master/docs/gateway/s3.md)
- [Microsoft Azure Blob Storage](https://github.com/minio/minio/blob/master/docs/gateway/azure.md)
- [Google Cloud Storage](https://github.com/minio/minio/blob/master/docs/gateway/gcs.md)
- [HDFS](https://github.com/minio/minio/blob/master/docs/gateway/hdfs.md)

@@ -1,97 +0,0 @@

# MinIO GCS Gateway

MinIO GCS Gateway allows you to access Google Cloud Storage (GCS) with Amazon S3-compatible APIs.

## Support

Gateway implementations are frozen and are not accepting any new features. Please report any bugs at <https://github.com/minio/minio/issues>. If you are an existing customer, please log in to <https://subnet.min.io> for production support.

## 1. Run MinIO Gateway for GCS

### 1.1 Create a Service Account key for GCS and get the Credentials File

1. Navigate to the [API Console Credentials page](https://console.developers.google.com/project/_/apis/credentials).
2. Select a project or create a new project. Note the project ID.
3. Select the **Create credentials** dropdown on the **Credentials** page, and click **Service account key**.
4. Select **New service account** from the **Service account** dropdown.
5. Populate the **Service account name** and **Service account ID**.
6. Click the dropdown for the **Role** and choose **Storage** > **Storage Admin** *(Full control of GCS resources)*.
7. Click the **Create** button to download a credentials file, and rename it to `credentials.json`.

**Note:** For alternate ways to set up *Application Default Credentials*, see [Setting Up Authentication for Server to Server Production Applications](https://developers.google.com/identity/protocols/application-default-credentials).

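Before starting the gateway, it can help to confirm that the downloaded file really is a service account key. The following is a minimal sketch, assuming a POSIX shell and the standard JSON service account key format, which contains a `"type": "service_account"` field. The function name `check_gcs_credentials` is hypothetical and not part of MinIO.

```sh
# Hypothetical helper (not part of MinIO): sanity-check a GCS service account key
# before pointing GOOGLE_APPLICATION_CREDENTIALS at it.
# Usage: check_gcs_credentials [/path/to/credentials.json]
check_gcs_credentials() {
  # Fall back to GOOGLE_APPLICATION_CREDENTIALS when no argument is given.
  file="${1:-${GOOGLE_APPLICATION_CREDENTIALS:-}}"
  if [ -z "$file" ]; then
    echo "no credentials file given and GOOGLE_APPLICATION_CREDENTIALS is unset" >&2
    return 1
  fi
  if [ ! -r "$file" ]; then
    echo "cannot read $file" >&2
    return 1
  fi
  # Service account keys are JSON documents containing "type": "service_account".
  if ! grep -Eq '"type"[[:space:]]*:[[:space:]]*"service_account"' "$file"; then
    echo "$file does not look like a service account key" >&2
    return 1
  fi
  echo "ok: $file"
}
```

This is only a text match on the key file; it does not verify that the key is still valid or that the service account has the **Storage Admin** role.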

### 1.2 Run MinIO GCS Gateway Using Docker

```sh
podman run \
  -p 9000:9000 \
  -p 9001:9001 \
  --name gcs-s3 \
  -v /path/to/credentials.json:/credentials.json \
  -e "GOOGLE_APPLICATION_CREDENTIALS=/credentials.json" \
  -e "MINIO_ROOT_USER=minioaccesskey" \
  -e "MINIO_ROOT_PASSWORD=miniosecretkey" \
  quay.io/minio/minio gateway gcs yourprojectid --console-address ":9001"
```

### 1.3 Run MinIO GCS Gateway Using the MinIO Binary

```sh
export GOOGLE_APPLICATION_CREDENTIALS=/path/to/credentials.json
export MINIO_ROOT_USER=minioaccesskey
export MINIO_ROOT_PASSWORD=miniosecretkey
minio gateway gcs yourprojectid
```
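MinIO refuses to start when the root credentials are missing or too short. The sketch below checks the three variables used above before launching the gateway. It assumes MinIO's minimum lengths of 3 characters for `MINIO_ROOT_USER` and 8 characters for `MINIO_ROOT_PASSWORD`; confirm these against the MinIO release you run. The function name `check_gateway_env` is hypothetical.

```sh
# Hypothetical pre-flight check (not part of MinIO) for the environment shown above.
# Assumed minimum lengths: 3 characters for the root user, 8 for the root password.
# Note: POSIX sh has no `local`, so status/value/user/pass are plain shell variables.
check_gateway_env() {
  status=0
  for var in GOOGLE_APPLICATION_CREDENTIALS MINIO_ROOT_USER MINIO_ROOT_PASSWORD; do
    # POSIX sh has no ${!var}; eval performs the indirect lookup.
    eval "value=\${$var:-}"
    if [ -z "$value" ]; then
      echo "$var is not set" >&2
      status=1
    fi
  done
  user="${MINIO_ROOT_USER:-}"
  pass="${MINIO_ROOT_PASSWORD:-}"
  if [ -n "$user" ] && [ "${#user}" -lt 3 ]; then
    echo "MINIO_ROOT_USER must be at least 3 characters" >&2
    status=1
  fi
  if [ -n "$pass" ] && [ "${#pass}" -lt 8 ]; then
    echo "MINIO_ROOT_PASSWORD must be at least 8 characters" >&2
    status=1
  fi
  return "$status"
}

# Example: only start the gateway when the environment looks complete.
# check_gateway_env && minio gateway gcs yourprojectid
```

All problems are reported in one pass rather than stopping at the first, so a single run shows everything that needs fixing.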


## 2. Test Using MinIO Console

|

||||

|

||||

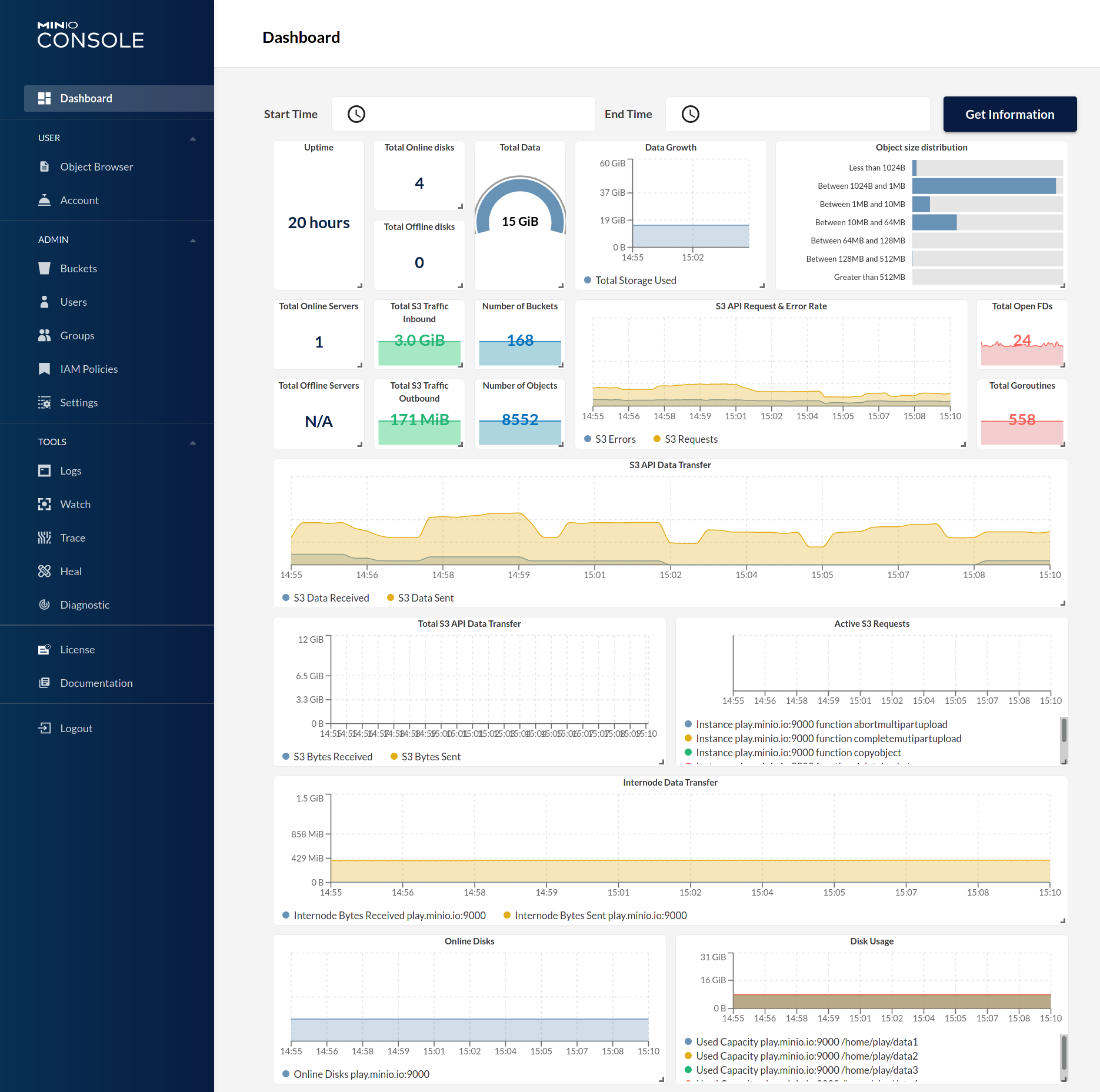

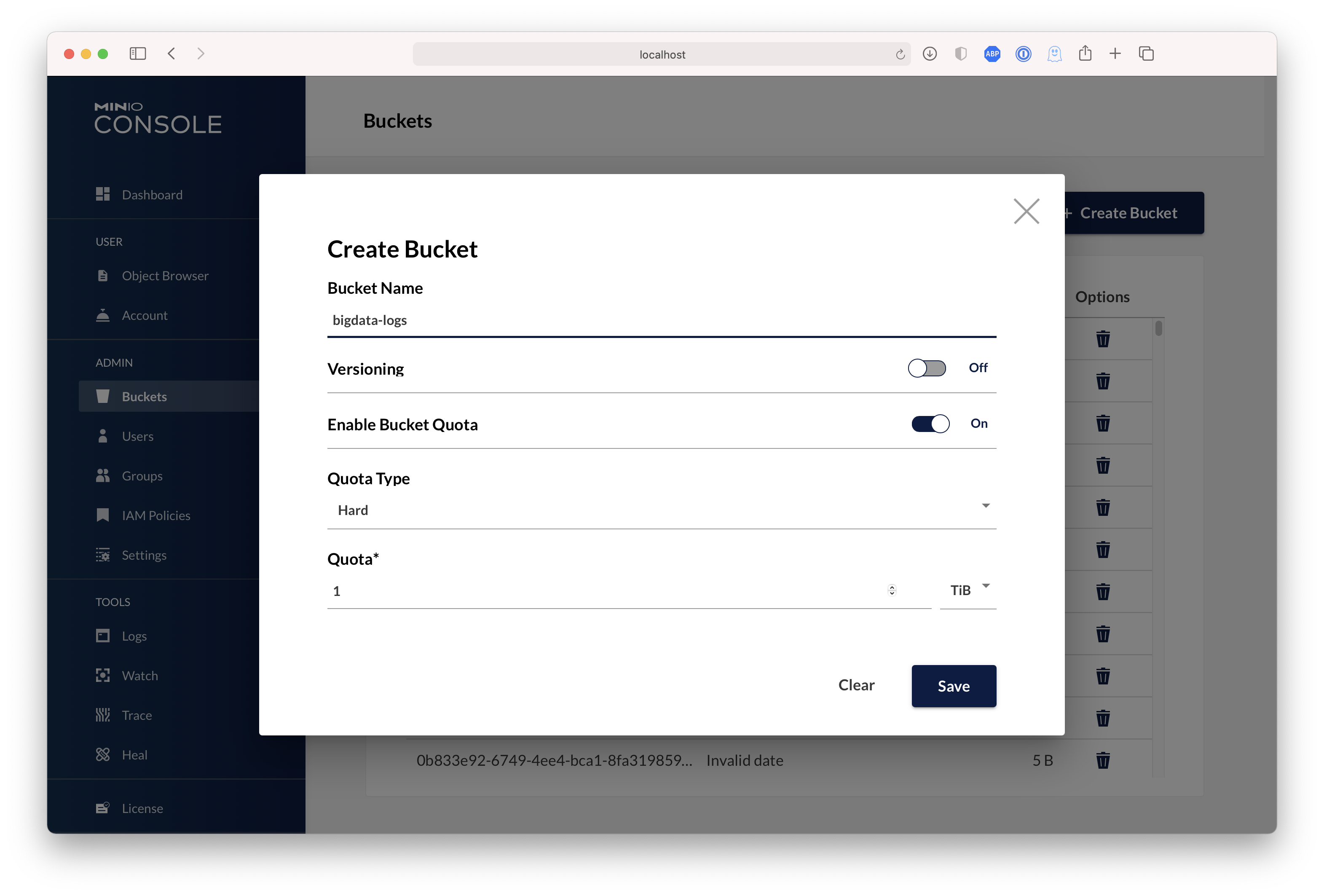

MinIO Gateway comes with an embedded web-based object browser that outputs content to <http://127.0.0.1:9000>. To test that MinIO Gateway is running, open a web browser, navigate to <http://127.0.0.1:9000>, and ensure that the object browser is displayed.

|

||||

|

||||

| Dashboard | Creating a bucket |

|

||||

| ------------- | ------------- |

|

||||

|  |  |

|

||||

|

||||

## 3. Test Using MinIO Client

MinIO Client is a command-line tool called `mc` that provides UNIX-like commands for interacting with the server (e.g. `ls`, `cat`, `cp`, `mirror`, `diff`, `find`). `mc` supports file systems and Amazon S3-compatible cloud storage services (AWS Signature v2 and v4).

### 3.1 Configure the Gateway using MinIO Client

Use the following command to configure `mc` to use the gateway:

```sh
mc alias set mygcs http://gateway-ip:9000 minioaccesskey miniosecretkey
```

### 3.2 List Buckets on GCS

Use the following command to list the buckets on GCS:

```sh
mc ls mygcs
```

A response similar to this one should be displayed:

```
[2017-02-22 01:50:43 PST] 0B ferenginar/
[2017-02-26 21:43:51 PST] 0B my-container/
[2017-02-26 22:10:11 PST] 0B test-container1/
```

### 3.3 Known limitations

MinIO Gateway has the following limitations when used with GCS:

* It only supports read-only and write-only bucket policies at the bucket level; all other variations will return `API Not implemented`.
* The `List Multipart Uploads` and `List Object parts` commands always return empty lists. Therefore, the client must store all of the parts that it has uploaded and use that information when invoking the `Complete Multipart Upload` command.

Other limitations:

* Bucket notification APIs are not supported.

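Because `List Object parts` always returns an empty list through this gateway, the client cannot ask the server which parts it has already uploaded. One way to cope, sketched below, is to append each part number and ETag to a local file as the parts are uploaded, then build the `--multipart-upload` JSON for `aws s3api complete-multipart-upload` from that record. The record format (one `PART_NUMBER ETAG` pair per line), the function name `parts_to_json`, and the assumption that ETags are stored without surrounding quotes are all illustrative choices, not part of MinIO or the AWS CLI.

```sh
# Hypothetical helper: convert a locally recorded list of uploaded parts into the
# JSON structure accepted by `aws s3api complete-multipart-upload --multipart-upload`.
# Assumed input format: one "PART_NUMBER ETAG" pair per line, ETags without quotes.
parts_to_json() {
  # Parts must be listed in ascending part-number order, so sort numerically first.
  sort -n "$1" | awk '
    BEGIN { printf "{\"Parts\":[" }
    NF == 2 {
      if (count++) printf ","
      printf "{\"ETag\":\"%s\",\"PartNumber\":%d}", $2, $1
    }
    END { printf "]}\n" }'
}

# Example (bucket, key and upload ID are placeholders):
# aws s3api complete-multipart-upload --endpoint-url http://gateway-ip:9000 \
#   --bucket mybucket --key mykey --upload-id "$UPLOAD_ID" \
#   --multipart-upload "$(parts_to_json parts.txt)"
```

Keeping the record on the client side is the point of the design: the gateway cannot reconstruct it, so losing `parts.txt` means restarting the upload.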

## 4. Explore Further

* [`mc` command-line interface](https://docs.min.io/docs/minio-client-quickstart-guide)
* [`aws` command-line interface](https://docs.min.io/docs/aws-cli-with-minio)
* [`minio-go` Go SDK](https://docs.min.io/docs/golang-client-quickstart-guide)

@@ -1,126 +0,0 @@

# MinIO HDFS Gateway

MinIO HDFS gateway adds Amazon S3 API support to the Hadoop HDFS filesystem. Applications can use both the S3 and file APIs concurrently without requiring any data migration. Since the gateway is stateless and shared-nothing, you may elastically provision as many MinIO instances as needed to distribute the load.

> NOTE: The intention of this gateway implementation is to make it easy to migrate your existing data on HDFS clusters to MinIO clusters using standard tools like `mc` or `aws-cli`. If the goal is to use HDFS perpetually, we recommend using HDFS directly for all write operations.

## Support

Gateway implementations are frozen and are not accepting any new features. Please report any bugs at <https://github.com/minio/minio/issues>. If you are an existing customer, please log in to <https://subnet.min.io> for production support.

## Run MinIO Gateway for HDFS Storage

### Using Binary

Namenode information is obtained automatically by reading `core-site.xml` from the Hadoop environment configured through your Hadoop environment variables (for example, *$HADOOP_HOME*).

```sh
export MINIO_ROOT_USER=minio
export MINIO_ROOT_PASSWORD=minio123
minio gateway hdfs
```

You can also override the namenode endpoint as shown below.

```sh
export MINIO_ROOT_USER=minio
export MINIO_ROOT_PASSWORD=minio123
minio gateway hdfs hdfs://namenode:8200
```
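To see which namenode the gateway is likely to pick up when no endpoint is given on the command line, you can read the `fs.defaultFS` property from `core-site.xml` yourself. This is a rough sketch, assuming the namenode is configured through the standard `fs.defaultFS` property and that the file lives in `$HADOOP_CONF_DIR` or `$HADOOP_HOME/etc/hadoop`. It uses `sed` rather than an XML parser, so it only handles simple `<name>`/`<value>` pairs. The function name `hdfs_default_fs` is hypothetical.

```sh
# Hypothetical helper (not part of MinIO): print the fs.defaultFS value from core-site.xml.
# Assumes a simple <property><name>...</name><value>...</value></property> layout;
# this is a text match, not a full XML parser.
hdfs_default_fs() {
  conf="${1:-${HADOOP_CONF_DIR:-${HADOOP_HOME:-}/etc/hadoop}/core-site.xml}"
  if [ ! -r "$conf" ]; then
    echo "cannot read $conf" >&2
    return 1
  fi
  # Join all lines so <name> and <value> on separate lines can be matched together,
  # then capture the non-blank text inside the <value> that follows fs.defaultFS.
  value=$(tr -d '\n' < "$conf" |
    sed -n 's|.*<name>[[:space:]]*fs\.defaultFS[[:space:]]*</name>[[:space:]]*<value>[[:space:]]*\([^<[:space:]]*\)[[:space:]]*</value>.*|\1|p')
  if [ -z "$value" ]; then
    echo "fs.defaultFS not found in $conf" >&2
    return 1
  fi
  echo "$value"
}

# Example: hdfs_default_fs "$HADOOP_HOME/etc/hadoop/core-site.xml"
```

If the printed value is not the namenode you expect, pass the endpoint explicitly as in the second example above.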


### Using Docker

Using Docker is experimental. Most Hadoop environments are not dockerized and may require additional steps to get this working properly, so you are better off using the binary in this situation.

```sh
podman run \
  -p 9000:9000 \
  -p 9001:9001 \
  --name hdfs-s3 \
  -e "MINIO_ROOT_USER=minio" \
  -e "MINIO_ROOT_PASSWORD=minio123" \
  quay.io/minio/minio gateway hdfs hdfs://namenode:8200 --console-address ":9001"
```

### Setup Kerberos

MinIO supports two Kerberos authentication methods: keytab and ccache.

To enable Kerberos authentication, set `hadoop.security.authentication=kerberos` in the HDFS config file:

```xml
<property>
  <name>hadoop.security.authentication</name>
  <value>kerberos</value>
</property>
```

MinIO loads `krb5.conf` from the path in the environment variable `KRB5_CONFIG`, or from the default location `/etc/krb5.conf`.

```sh
export KRB5_CONFIG=/path/to/krb5.conf
```

If you want MinIO to use ccache for authentication, set the environment variable `KRB5CCNAME` to the credential cache file path; otherwise, MinIO uses the default location `/tmp/krb5cc_%{uid}`.

```sh
export KRB5CCNAME=/path/to/krb5cc
```
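The lookup order described above can be mirrored in a small shell function that prints which `krb5.conf` and credential cache paths MinIO would be expected to use, which is handy when debugging authentication failures. This sketch only restates the documented defaults (`/etc/krb5.conf` and `/tmp/krb5cc_<uid>`); it does not query MinIO itself, and the function name `krb5_effective_paths` is hypothetical.

```sh
# Hypothetical helper: print the krb5.conf and ccache paths implied by the documented
# lookup rules (environment variable first, then the default location).
krb5_effective_paths() {
  echo "krb5.conf: ${KRB5_CONFIG:-/etc/krb5.conf}"
  # The default cache name embeds the numeric user ID of the process running MinIO,
  # so run this as the same user that starts the gateway.
  echo "ccache: ${KRB5CCNAME:-/tmp/krb5cc_$(id -u)}"
}
```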


If you prefer to use a keytab with automatic renewal, you need to configure three environment variables:

- `KRB5KEYTAB`: the location of the keytab file
- `KRB5USERNAME`: the username
- `KRB5REALM`: the realm

Please note that the username is not the principal name: use `hdfs`, not `hdfs@REALM.COM`.

```sh
export KRB5KEYTAB=/path/to/keytab
export KRB5USERNAME=hdfs
export KRB5REALM=REALM.COM
```
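Since the three variables must be set together, and `KRB5USERNAME` must be a bare username rather than a `user@REALM` principal, a pre-flight check along these lines can catch the most common mistakes before starting `minio gateway hdfs`. It is a sketch under those assumptions: treating any `@` in `KRB5USERNAME` as an error is this example's reading of the note above, and the function name `check_krb5_keytab_env` is hypothetical.

```sh
# Hypothetical pre-flight check (not part of MinIO) for keytab-based Kerberos login.
check_krb5_keytab_env() {
  if [ -z "${KRB5KEYTAB:-}" ] || [ -z "${KRB5USERNAME:-}" ] || [ -z "${KRB5REALM:-}" ]; then
    echo "KRB5KEYTAB, KRB5USERNAME and KRB5REALM must all be set" >&2
    return 1
  fi
  if [ ! -r "$KRB5KEYTAB" ]; then
    echo "cannot read keytab $KRB5KEYTAB" >&2
    return 1
  fi
  # The username is not a principal: "hdfs" is expected, not "hdfs@REALM.COM".
  case "$KRB5USERNAME" in
    *@*)
      echo "KRB5USERNAME should not include a realm; set KRB5REALM instead" >&2
      return 1
      ;;
  esac
  echo "ok: $KRB5USERNAME in realm $KRB5REALM"
}

# Example: check_krb5_keytab_env && minio gateway hdfs
```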


## Test using MinIO Console

|

||||

|

||||

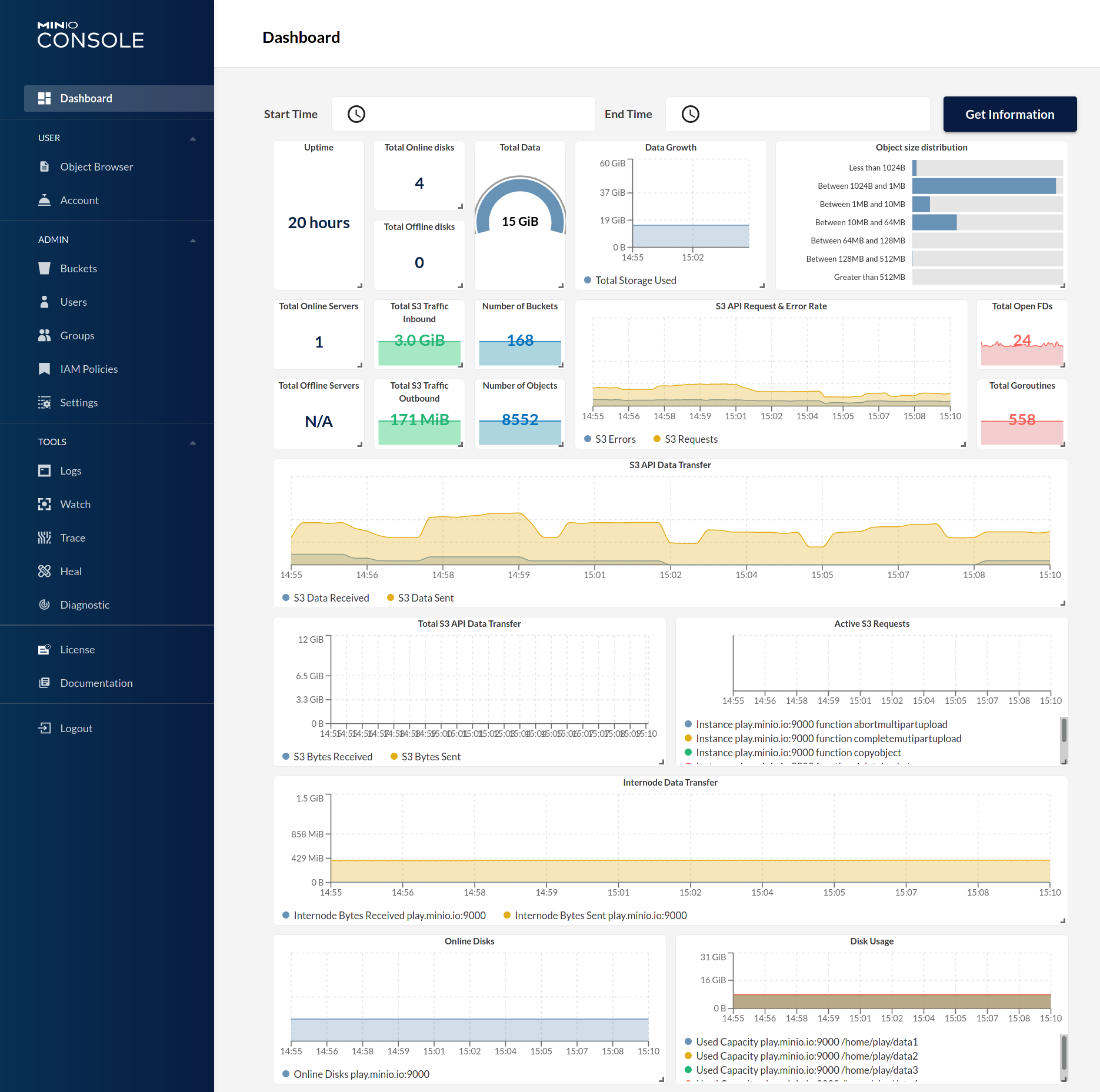

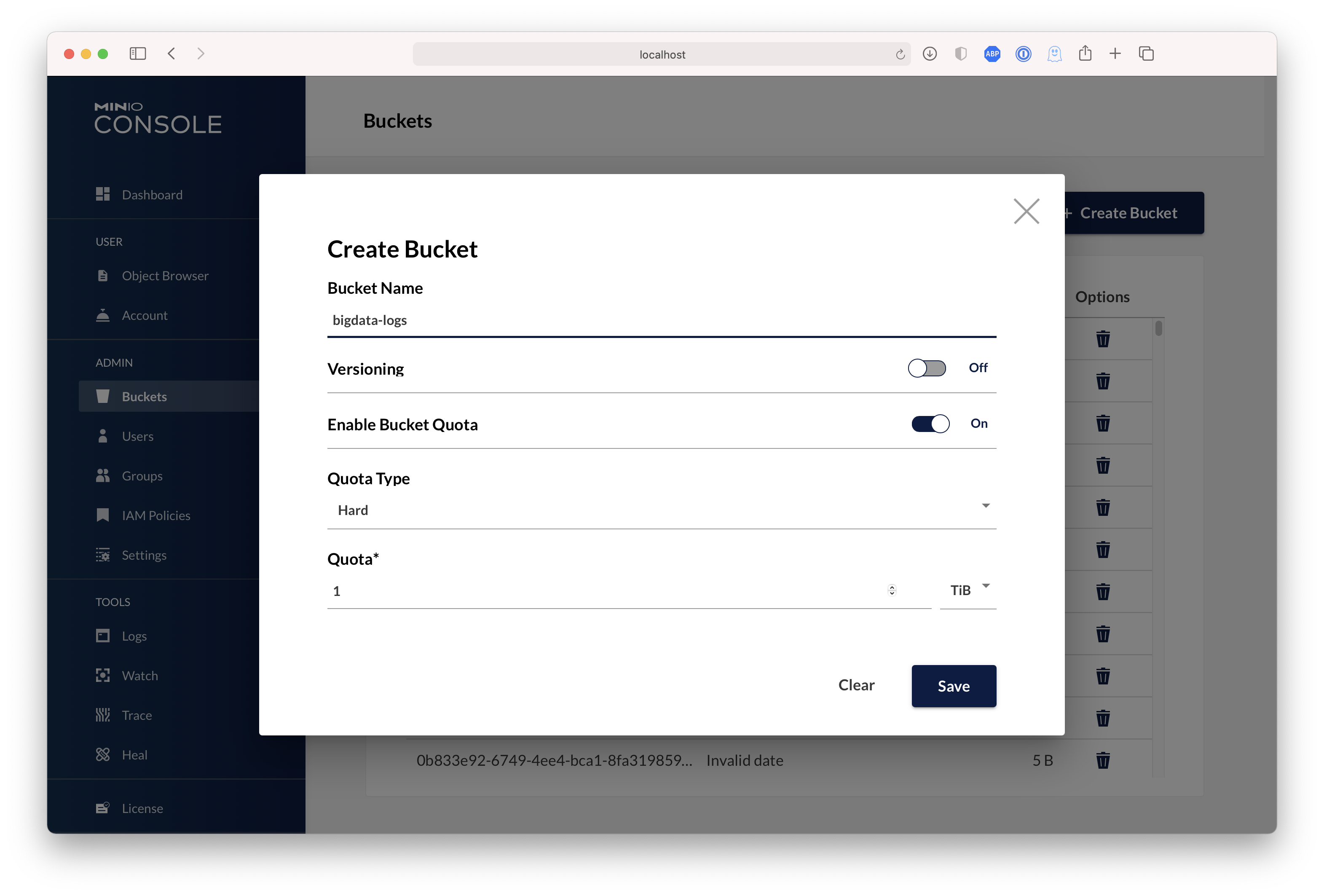

*MinIO gateway* comes with an embedded web based object browser. Point your web browser to <http://127.0.0.1:9000> to ensure that your server has started successfully.

|

||||

|

||||

| Dashboard | Creating a bucket |

|

||||

| ------------- | ------------- |

|

||||

|  |  |

|

||||

|

||||

## Test using MinIO Client `mc`

`mc` provides a modern alternative to UNIX commands such as `ls`, `cat`, `cp`, `mirror`, and `diff`. It supports filesystems and Amazon S3-compatible cloud storage services.

### Configure `mc`

```sh
mc alias set myhdfs http://gateway-ip:9000 minio minio123
```

### List buckets on HDFS

```sh
mc ls myhdfs
```

A response similar to this one should be displayed:

```
[2017-02-22 01:50:43 PST] 0B user/
[2017-02-26 21:43:51 PST] 0B datasets/
[2017-02-26 22:10:11 PST] 0B assets/
```

### Known limitations

Gateway inherits the following limitations of the HDFS storage layer:

- No bucket policy support (HDFS has no such concept)
- No bucket notification API support (HDFS has no support for fsnotify)
- No server-side encryption support (intentionally not implemented)
- No server-side compression support (intentionally not implemented)
- Concurrent multipart operations are not supported (HDFS lacks safe locking support, or its support is poorly implemented)

## Explore Further

- [`mc` command-line interface](https://docs.minio.io/docs/minio-client-quickstart-guide)
- [`aws` command-line interface](https://docs.minio.io/docs/aws-cli-with-minio)
- [`minio-go` Go SDK](https://docs.minio.io/docs/golang-client-quickstart-guide)
