How to be more productive

Work Chronicles 06 07 2021

The post How to be more productive appeared first on Work Chronicles.

Software Engineering Manager (Clojure Team)

tl;dr

- Manager, or senior-level developer moving to management

- Direct software engineering for a growing ed-tech firm

- Functional programming experience a plus

- Remote (if desired), competitive salary, unbeatable health insurance, and generous PTO

What we're looking for

Senior-level developer looking for management experience, or experienced manager furthering his or her career.

Culturally, we tend to promote from within; for this position, however, we want you to introduce stronger programming standards, mentor younger developers, and generally help us reach bigger goals we have as a company.

What you will do

Banzai's development group consists of three Clojure devs, an architect, and two JavaScript devs, with one more front-end position currently open. You will shape the team according to your own vision; vet and hire future engineers; measure individual performance; and demonstrate how to hit company goals. You will lead by example.

We have two initiatives: create more complex types of interactive software for schools, banks, and credit unions; and complete a greenfield project for small- to mid-size businesses helping employers deepen their employees' understanding of their benefits.

While you will be a manager first, you may also contribute to the code: we believe great managers stay close to the problems their teams face daily. You will report to the Head of Product.

What we expect

You have well-developed opinions about leading people, organizing processes, and writing software.

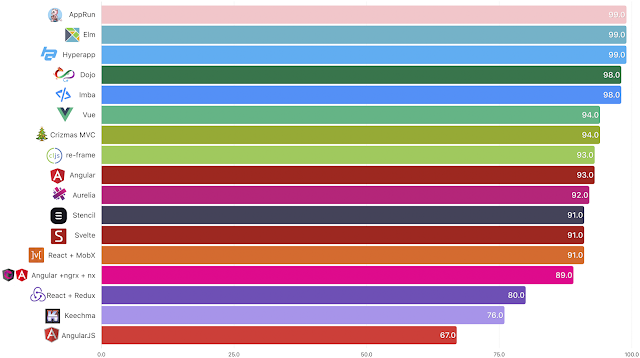

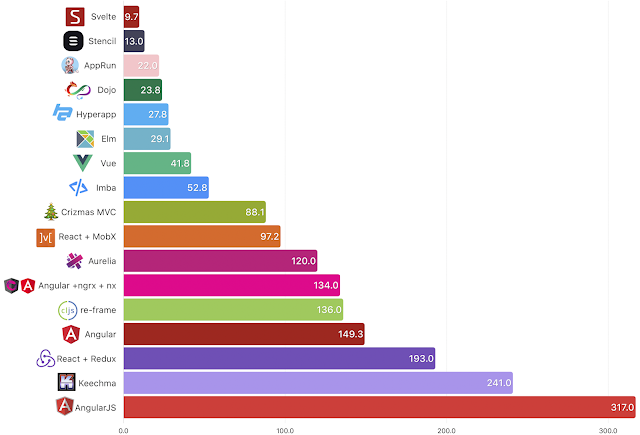

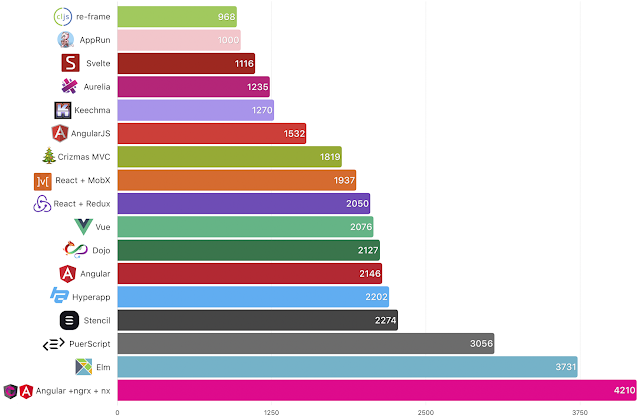

Our front-end stack is built on JavaScript, with a Clojure backend. While you do not need Clojure experience, familiarity with innovative tooling native to functional programming is a plus (e.g. REPL-driven development, React, Vue, Elm, RxJS, Elixir, F#, etc.).

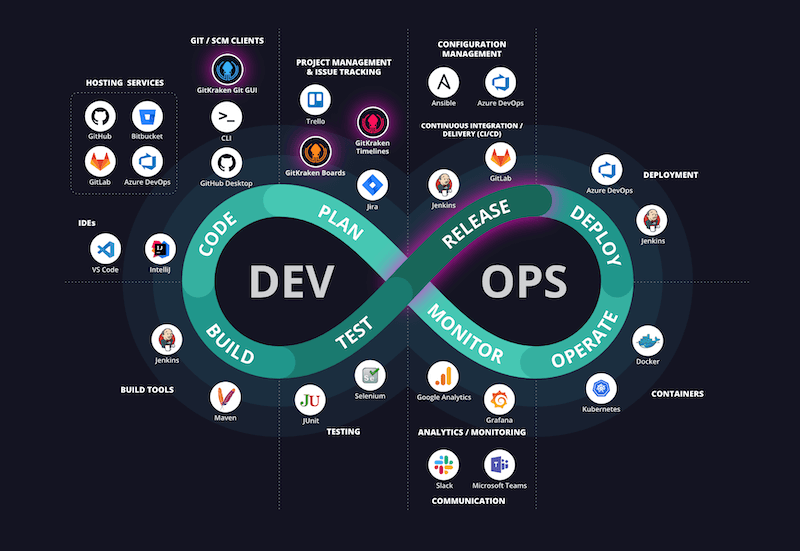

You have a deep understanding of programming for the web, including monitoring performance, deploying, testing, reviewing code, and generally helping us understand what's required of a company with more developers than we have.

Our architecture

Our software architecture is based around a monolithic repository with several application and worker subsystems running on a PaaS. Three of our web applications are SPAs, and two are server-driven templating engines. The Clojure code has more than 50% test coverage. We use a host of caching mechanisms to help keep the site running at or below 50ms at the 95th percentile.

We hope you will find new ways to help us improve the architecture for the sake of the business.

What we're offering

Our goal is to make sure you're not worried about benefits:

Our product team offers a competitive salary, reviewed every six months. We don't like offer wars, and you shouldn't have to switch jobs for a raise. Every six months we'll discuss your pay in a simple performance review. It's common for employees to get raises at each review, assuming the company is growing and you're regularly hitting expectations.

You may work remotely or join us at our office.

Best of all we offer full-health care coverage. We cover ~95% of your insurance premium, and all of your out-of-pocket expenses via a reimbursable account. You will get access to a Flexible Spending Account (FSA), letting you purchase things like eyeglasses and over-the-counter medications tax free.

Paid time off (PTO) is very flexible. We recommend three business weeks per year, in addition to a generous holiday schedule. There is, however, no technical limit inasmuch as it is approved with your manager and you're regularly hitting company goals.

We offer a 401(k) with a generous 5% match, with a wide variety of investment options. Half of your employer contributions will vest in one year, the remainder in two.

You will be eligible for company stock options. The options plan is simple and straightforward, with a four-year vesting schedule. When your options vest, you will have the opportunity to buy them and become an owner of company stock, if you choose.

You will be set you up with a phone and company-paid service.

Additional benefits include life and disability insurance, food and drinks on company premises, and other fringes. Should you become a parent (either for the first time or again!), you will have access to plenty of paid leave.

Finally, while strong benefits are essential to Banzai's compensation package, more importantly, you will have varied and interesting opportunities to grow in your careerand assume meaningful responsibility. You will be expected to learn new things on your own, but we also make time for training.

Tell me about the company

Banzai is an independent, owner-operated technology company dedicated to helping people become wise stewards of their finances through online solutions and local partnerships. We employ over 50 professionals in management, sales, sponsor relations, support, public relations, and product development.

Owner operated

Banzai has been in business for nearly 15 years, and the company's co-founders continue to lead it today. While we are aggressively growth-minded, the company is profitable, self-sustaining, and it requires neither debt nor venture funding.

Banzai is unique in the technology industry---our mindset is long-term. No unrealistic growth targets; no investor drama; no chasing unicorns. We aim for steady, reliable growth that compounds over time, making the company a more lucrative, happier place for employees in the long run.

Oh, and you can be an owner too: every full-time employee receives a stock option grant.

- www.teachbanzai.com

- Located @ 2230 N. University Pkwy., Bldg. 14, Provo Utah

Tell me about the culture

Banzai's culture marries a high level of trust and flexibility with equally high levels of responsibility. We place important jobs on our employees' shoulders, even juniors. For leadership, we tend to promote from within.

We ask you to bring your best self to work.

Meeting these expectations also earns you a great deal of freedom. At Banzai we don't count hours, nor do we set your schedule. (Although it is generally expected that you will work 40 hours per week.) Your personal time is your own; there's plenty of space in the day to take breaks and run errands; and our holiday schedule and PTO policy is generous. Fire drills and late nights are rare.

How do I apply?

We want to see what you've accomplished. Please send a portfolio and cover letter explaining why you're a great fit to Kendall Buchanan, CTO, kendall [at] teachbanzai.com.

IV Mar i jazz

AU Agenda 06 07 2021

La entrada IV Mar i jazz aparece primero en AU Agenda.

PARC DR. LLUCH marijazz.es Ara més que mai necessitem música, oci i cultura, i millor si és en un espai obert. D’aqueixa necessitat nasqueren les Jam Sesions en 27 Amigos, un bar insígnia del barri del Cabanyal que ara, amb recolzament de l’Ajuntament de València, Sedajazz i l’Associació de Comerciants del Marítim, ens porta Mar […]

La entrada IV Mar i jazz aparece primero en AU Agenda.

XI Russafa Escència

AU Agenda 06 07 2021

La entrada XI Russafa Escència aparece primero en AU Agenda.

russafaescenica.com El festival Russafa Escènica se expande como una gota de aceite, ahora con un proyecto de residencias artísticas en diferentes municipios de la provincia llamado Via Escènica. Al cierre de la edición de esta revista, el festival estaba en pleno proceso de selección de los viveros (30 min.) y los bosques (60 min.) de […]

La entrada XI Russafa Escència aparece primero en AU Agenda.

La entrada 31è MIM aparece primero en AU Agenda.

mimsueca.com Torna la Mostra Internacional de MIM de Sueca aquest setembre amb companyies de Portugal, Brasil, Xile, Alemanya, França i Regne Unit, sense por a les fronteres. De les illes britàniques venen Gandini Juggling per presentar 4×4: Ephemeral architectures, on quatre malabaristes i quatre ballarins de ballet comparteixen escenari. Francesos son Cie. Bivouac, que alternen […]

Bürstner Club

AU Agenda 06 07 2021

La entrada Bürstner Club aparece primero en AU Agenda.

ESPACIO INESTABLE. Aparisi i Guijarro, 7 Dels altres es una joven compañía valenciana fundada en el año 2020 y dirigida por Eleonora Gronchi i Pablo Meneu para darle una vuelta al circo contemporáneo. Han creado piezas como Staged para la compañía Circumference (galardonada con el Total TheatreAward del Festival Fringe de Edinburgo 2019 como mejor espectáculo de […]

La entrada Bürstner Club aparece primero en AU Agenda.

Espaldas de plata

AU Agenda 06 07 2021

La entrada Espaldas de plata aparece primero en AU Agenda.

SALA ULTRAMAR. Alzira, 9 De veritat en Àfrica s’escolten tambors en la llunyania, a totes hores, com en els sons d’ambient insufribles dels zoos? Walter no ho sap, mai ha estat allí. En l’agència de publicitat en la qual treballa té l’“oportunitat” d’acceptar un encàrrec per a un home, un polític a qui detesta, però […]

La entrada Espaldas de plata aparece primero en AU Agenda.

Señora de rojo sobre fondo gris

AU Agenda 06 07 2021

La entrada Señora de rojo sobre fondo gris aparece primero en AU Agenda.

TEATRO OLYMPIA. Sant Vicent Màrtir, 44 Delibes y José Sacristán, una fórmula que ya ha demostrado funcionar a la perfección en este soliloquio dirigido por José Sámano. Un pintor con una carrera dilatada, Nicolás, padece una importante crisis existencial, su creatividad se ha esfumado tras la muerte de su mujer. El fondo gris que imprima […]

La entrada Señora de rojo sobre fondo gris aparece primero en AU Agenda.

V Cicle Escèniques LGTBI

AU Agenda 06 07 2021

La entrada V Cicle Escèniques LGTBI aparece primero en AU Agenda.

CARME TEATRE. Gregori Gea, 6 Dansa de València, Madrid, Galícia i Canàries és el que ofereix la cinquena edició del Cicle Escèniques LGTBI del Carme Teatre, on el plat primícia arriba en forma d’entrant. Arrenca la mostra amb l’estrena absoluta de Nus-altres possibles del valencià Javier J. Hedrosa [2-5], un habitual de La Coja Dansa […]

La entrada V Cicle Escèniques LGTBI aparece primero en AU Agenda.

La entrada Maixabel aparece primero en AU Agenda.

Icíar Bollaín · España · 2021 · Guion: Icíar Bollaín e Isa Campo · Intérpretes: Blanca Portillo, Luis Tosar, Bruno Sevilla… Reparto de lujo con dos grandes de la interpretación en España como Blanca Portillo y Luis Tosar. Maixabel cuenta la historia de Maixabel Lasa, mujer del político Juan María Jaúregui, asesinado por ETA en el […]

Cuestión de sangre

AU Agenda 06 07 2021

La entrada Cuestión de sangre aparece primero en AU Agenda.

Tom McCarthy · USA · 2021 · Guión: Thomas Bidegain, Noé Debré, Marcus Hinchey y Tom McCarthy · Intérpretes: Matt Damon, Abigail Breslin, Camille Cottin… El realizador de la galardonada Spotlight regresa con este largometraje que transita los códigos del thriller y el drama político y social. En Cuestión de sangre, Matt Damon interpreta a […]

La entrada Cuestión de sangre aparece primero en AU Agenda.

La entrada BABii aparece primero en AU Agenda.

CENTRE DEL CARME. Museu, 2 Escoltar MiiRROR, l’àlbum de la talentosa artista multidisciplinària britànica BABii, és endinsar-se en l’atmosfera vaporosa i etèria d’un pop electrònic i garage, és evolucionar en el cor d’una realitat paral·lela que explora les emocions íntimes i els dimonis interiors de la seua creadora. BABii, en una estètica alienadora do it […]

An Hour About… Psuedo.com

The History of the Web 06 07 2021

Pseudo.com is a forgotten relic of the dot-com era. Was it ahead of its time? A moonshot that went too far? Or simply a piece of elaborate performance art?

The post An Hour About… Psuedo.com appeared first on The History of the Web.

CUÑADOS

Cinestudio d'Or 04 07 2021

del 5 al 11 de julio

17:15h. 20:55h. versión doblada / digital

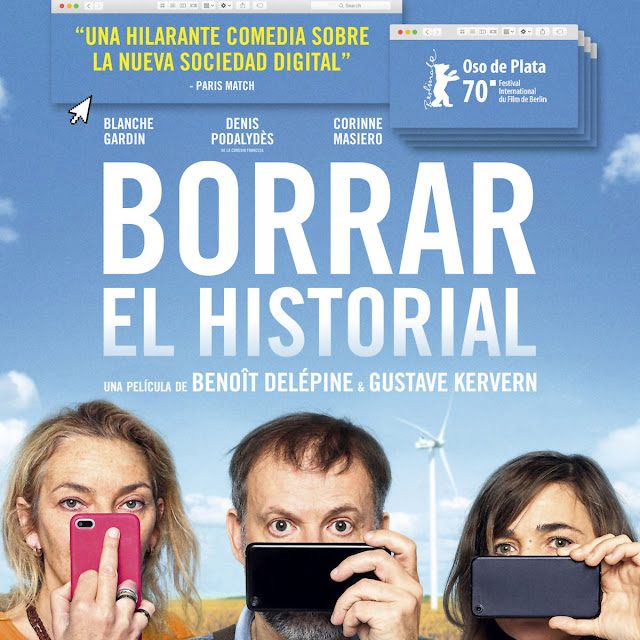

BORRAR EL HISTORIAL

Cinestudio d'Or 04 07 2021

del 5 al 11 de julio

19:00h. versión doblada / digital

UNA JOVEN PROMETEDORA

Cinestudio d'Or 04 07 2021

Radar verano mix Parte 1

República Web 03 07 2021

Debido a un error de grabación la pista de Antony no está disponible. Esta primera parte es una versión editada con las partes de Antony (que no salen en la mezcla). Intentaremos que Antony grabe sus enlaces por separado para la parte 2.

Despedimos temporada 4 del podcast con un episodio cargado de enlaces de interés, salpicados eso sí, con nuestras anotaciones personales. Como es habitual en la sección Radar, este episodio recoge recursos y herramientas que nos parecen valiosas para nuestro trabajo. En esta primera parte se incluyen las recomendaciones recopiladas por Javier y Andros. También al inicio del podcast David Vaquero hace un valoración del Congreso Eslibre que se celebró la semana pasada, y que tuvo al propio David participando como colaborador y dando un taller. Andros también participó ofreciendo una charla sobre el desarrollo de Glosa, su solución personal para agregar comentarios en un sitio web estático.

Visita la web del podcast donde encontrarás los enlaces de interés discutidos en el episodio. Estaremos encantados de recibir vuestros comentarios y reacciones.

Nos podéis encontrar en:

- Web: republicaweb.es

- Canal Telegram: t.me/republicaweb

- Grupo Telegram Malditos Webmasters

- Twitter: @republicawebes

- Facebook: https://www.facebook.com/republicaweb

¡Contribuye a este podcast!. A través de la plataforma Buy me a coffee puedes realizar una mínima aportación desde 3€ que ayude a sostener a este podcast. Tú eliges el importe y si deseas un pago único o recurrente. ¡Muchas gracias!

RUEGA POR NOSOTROS

Cinestudio d'Or 03 07 2021

Greatest weakness

Work Chronicles 03 07 2021

The post Greatest weakness appeared first on Work Chronicles.

Clojure Deref (July 2, 2021)

Clojure News 02 07 2021

Welcome to the Clojure Deref! This is a weekly link/news roundup for the Clojure ecosystem. (@ClojureDeref RSS)

Highlights

Clojurists Together announced a more varied set of funding models moving forward to better match what projects have been seeking.

All of the clojureD 2021 videos are now available, including my video discussing a set of new Clojure CLI features and the tools.build library. We have been hard at work polishing documentation and finalizing a last few bits of the source prep functionality and we expect it will be available soon for you to work with! For now, the video is a good overview of what’s coming: expanded source for source-based libs, a new tools.build library, and some extensions to tool support in the Clojure CLI.

In the core

We have mostly been working on Clojure CLI and tools.build lately but these items went by this week, maybe of interest:

-

Does Clojure still have rooms to improve at compiler level? - some discussion at ClojureVerse

-

CLJ-2637 - Automatic argument conversion to Functional Interface (Lambda) from Clojure fn - this patch was proposed to do automatic SAM conversion for Clojure functions in the compiler.

This is an area we’ve actually spent a lot of time thinking about for Clojure 1.11, (tracking under CLJ-2365 although most of the work has happened off ticket). In particular we have talked about a long list of possible use cases for functional interop and also a long list of ideas for making functional interop less cumbersome, both syntax and implementation. The examples given in CLJ-2637 are primarily about the Java Stream API but we don’t think that’s particularly high on the list of what’s interesting (if you’re in Clojure, just use Clojure’s apis!). But there are cases where you have Java APIs in the JDK or elsewhere that now take one of the SAM-style interfaces, or a java.function interface and it would be nice to reduce the friction in passing a Clojure function without needing to reify - either by automatic detection and conversion, or helper fns, or even new syntax and compiler support. No conclusions yet.

Podcasts and videos

-

Apropos - Mia, Mike, Ray, and Eric chat about Clojure

Blogs, discussions, tutorials

-

Clojure Morsels - a new biweekly mailing list for Clojure news starting soon

-

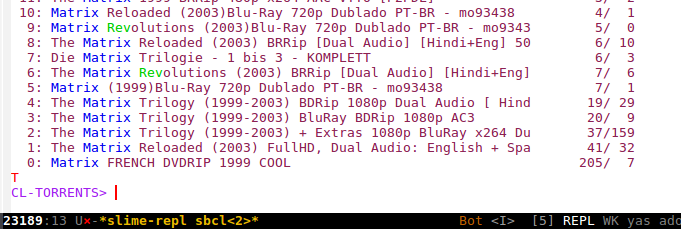

REPL vs CLI: IDE wars - Vlad thinks about REPLs vs the command line for dev

-

Clojure Building Blocks - Gustavo Santos

-

Getting Started with Clojure - Gustavo Santos

-

Rich Comment Blocks - Thomas Mattacchione

Training and hiring

-

Learn Datomic - is a new course for learning Datomic and Datalog by Jacek Schae, coming soon!

-

Who’s Hiring - monthly hiring thread on Clojure subreddit

Libraries and tools

Some interesting library and tool updates and posts this week:

-

PCP - Clojure replacement for PHP

-

holy-lambda 0.2.2 - A micro-framework that integrates Clojure with AWS Lambda on either Java, Clojure Native, or Babashka runtime

-

clojure-lsp 2021.07.01-13.46.18 - Language Server (LSP) for Clojure, this release with new API/CLI support!

-

clojureflare - a new ClojureScript lib for using Cloudflare workers

-

Calva 2.0.202 - Clojure & ClojureScript in Visual Studio Code

Speed of execution

Work Chronicles 01 07 2021

The post Speed of execution appeared first on Work Chronicles.

No sin mis cookies 01 07 2021

Si hasta 2020 el SEO local era importante, ahora toma el carácter de plenamente imprescindible. Si competir ya es de por sí difícil, hacerlo con tus vecinos de localidad es todo un desafío.

The post appeared first on No sin mis cookies.

667

Extra Ordinary 30 06 2021

Expertise

Work Chronicles 30 06 2021

The post Expertise appeared first on Work Chronicles.

¿Quieres vender en la Apple Store? Lo siento pero tenemos que hablar.

Cuando decides poner a la venta algún elemento dentro de un App no todas las ganancias van a tu bolsillo. Dependiendo de ciertas variables Apple se llevará un porcentaje por cada transacción, que puede ser de un 0% (ninguna), 15% o un 30%.

Un ejemplo rápido. Acabas de publicar un App de cocina donde ofreces deliciosas recetas veganas para perros. Decides que la vas a monetizar por media de una suscripción a un precio de 10 euros al mes. Solamente quienes paguen podrán visualizar las recetas completas con todos sus pasos; el resto únicamente disfrutaran del primer paso. En este caso de cada suscripción a ti te llegará 7 euros (un 70%) mientras que Apple se quedará una comisión de 3 euros (un 30%). Y esta situación ser repetirá en cada usuario y renovación.

Existen 2 servicios que no debes confundir, ya que su uso es completamente diferente a pesar que ambos gestionen dinero.

-

Apple Pay: Implementación para hacer pagos en una web o App por medio de una tarjeta que ha sido vinculada con la cartera de Apple.

-

In-App Purchase: Implementación para realizar pagos dentro de un App. Orientado a ofrecer contenido como: productos digitales, suscripciones y contenido premium.

El último servicio es el que nos interesa. Dentro podremos encontrar 3 subcategorías de posibles pagos:

- Consumibles: como gemas en un videojuego o incrementar la visibilidad de un perfil temporalmente en un red de citas.

- No consumibles: características premium que son compradas en una ocasión y no expiran.

- Suscripciones: características premium o acceso a contenido a través de un pago recurrente. Cuando el usuario decide cancelar el siguiente pago, estas características dejan de ser accesibles transcurrido el periodo pagado.

Es importante que sepas donde encaja el App en estas subcategorías porque las comisiones cambiarán.

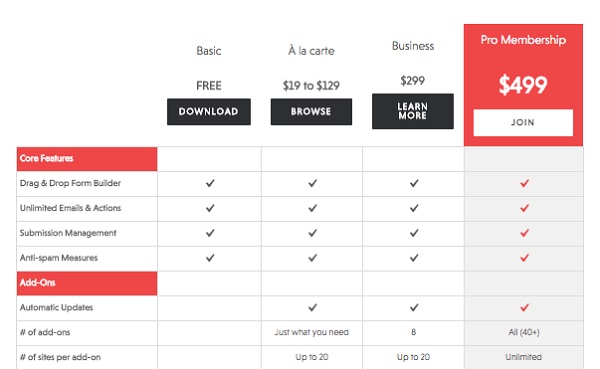

Comisiones

- El App es gratis en tienda, no se vende nada: 0%.

- El App es gratis en tienda pero posee anuncios: 0%.

- El App es gratis en tienda pero se vende productos o servicios físicos: 0%.

- El App es gratis en tienda aunque puedes pagar por consumibles: 30%.

- El App es de pago, no se vende nada: 30%.

- El App es gratis en tienda aunque puedes pagar una suscripción: 30%, aunque después del primer año baja a 15%.

- Existe pagos o suscripciones fuera del App: 0%, sin embargo no debe existir ningún enlace o referencia al lugar donde se pueden realizar los pagos.

- Existen pagos o suscripciones fuera del App con posibilidad de hacerlo también dentro de un dispositivo Apple: 0% si no es en el ecosistema de Apple, dentro del App se debe pagar las comisiones anteriormente mencionadas.

Tabla de precios disponibles

Los precios no los eliges libremente, Apple te da una tabla de posibilidades que si o si debes seleccionar la opción que más se ajuste con tu negocio. Te aviso que las cantidades a percibir varían dependiendo del país. Puede darse el caso que marques 10 euros, pero en un lugar como la India se venda por 4 euros.

Otras comisiones

No olvides que independientemente de Apple debes integrar una pasarela de pago. Alguien debe gestionar el movimiento de dinero entre la tarjeta del cliente y tu cuenta bancaria. Corre por tu cuenta. Las más populares son las siguientes.

- Stripe: Se lleva una comisión de 1,4 % + 0,25 € para tarjetas europeas y 2,9 % + 0,25 € para tarjetas no europeas.

- Paypal: Se lleva una comisión de 2,8 % + una tarifa fija dependiendo de la moneda.

Sin olvidar el pago anual de Apple Developer Program, que son 100 dolares. Es el precio para que tu App esté accesible en la tienda. En caso de no pagarlo sería retirada.

Y por último los impuestos locales de cada país. Pero esto es inabarcable de explicar en un artículo de blog.

Conclusión

Para publicar un App en la Apple Store debemos tener en cuenta las siguientes comisiones.

- Apple Developer Program: 100 dolares anuales.

- In-App Purchase: Entre 0% al 30%.

- Pasarela de pagos: Los mencionados rondan de entre 1,4% al 2.9% + una tarifa fija.

- Reducción de precio en algunos países.

- Impuestos locales.

Aún así, sigue siendo la plataforma más rentable dentro del desarrollo de Apps.

Más información

Todas las funciones: In‑App Purchase

Comisiones: Principales prácticas

Follow Through

Work Chronicles 28 06 2021

The post Follow Through appeared first on Work Chronicles.

Tecnología GPON - FTTH

Blog elhacker.NET 28 06 2021

Demystifying styled-components

Josh Comeau's blog 27 06 2021

Wonder Why

Work Chronicles 25 06 2021

The post Wonder Why appeared first on Work Chronicles.

Clojure Deref (June 25, 2021)

Clojure News 25 06 2021

Welcome to the Clojure Deref! This is a weekly link/news roundup for the Clojure ecosystem. (@ClojureDeref RSS)

Highlights

It is common to see complaints that both Clojure jobs and Clojure developers are hard to find. The real truth is: both exist, but there is sometimes a mismatch in either experience or geographic distribution. We don’t typically highlight jobs in the Deref but here are some great places to find Clojure jobs:

-

Brave Clojure - job board

-

Functional Works - job board

-

Clojurians slack - #jobs and #remote-jobs channel

-

Clojure subreddit - monthly thread

-

Who is hiring - search at HackerNews

Also, I want to highlight that clojureD 2021 conference videos are coming out now, about one per day, check them out!

Sponsorship spotlight

Over the last couple years, the Calva team has been putting a ton of effort into making VS Code a great place to Clojure. If you enjoy the fruits of that effort, consider supporting one of these fine folks working in this area:

-

Peter Strömberg - sponsor for Calva

-

Brandon Ringe - sponsor for Calva

-

Eric Dallo - sponsor for clojure-lsp

Podcasts and videos

-

CaSE - Conversations about Software Engineering talks with Eric Normand

-

ClojureScript podcast - Jacek Schae interviews Howard Lewis Ship

-

Apropos - Mia, Mike, Ray, Eric chat plus special guest Martin Kavalar

Blogs, discussions, tutorials

-

Open and Closed Systems with Clojure - Daniel Gregoire

-

What is simplicity in programming and why does it matter? - Jakub Holý

-

Counterfactuals are not Causality - Michael Nygard - not about Clojure but worth a read!

-

How I’m learning Clojure - Rob Haisfield

-

Clojure metadata - Roman Ostash

-

Data notation in Clojure - Roman Ostash

-

Specific vs. general: Which is better? - Jakub Holý

Libraries

Some interesting library updates and posts this week:

-

spock 0.1.1 - a Prolog in Clojure

-

recife 0.3.0 - model checker library in Clojure

-

datascript 1.2.1 - immutable in-memory database and Datalog query engine

-

sparql-endpoint 0.1.2 - utilities for interfacing with SPARQL 1.1 endpoints

-

pulumi-cljs - ClojureScript wrapper for Pulumi’s infrastructure as code Node API

-

c4k-keycloak - k8s deployment for keycloak

-

clj-statecharts 0.1.0 - State Machine and StateCharts for Clojure(Script)

-

clojure-lsp 2021.06.24-14.24.11 - Language Server (LSP) for Clojure

-

tick 0.4.32 - Time as a value

-

aws-api 0.8.515 - programmatic access to AWS services from Clojure

Tools

-

Clojure LibHunt - find Clojure open source projects!

-

syncretism - options search engine based on Yahoo! Finance market data

-

mastodon-bot - bot for mirroring Twitter/Tumblr accounts and RSS feeds on Mastodon

Fun and Games

Chris Ford did a live coding performance (on keytar!) - see the code

Throwback Friday (I know, I’m doing it wrong)

In this recurring segment, we harken back to talks from an older time of yore. This week, we’re featuring:

-

How to Think about Parallel Programming: Not! by Guy L. Steele Jr from Strange Loop 2010 - it’s a decade+ old but still worth watching for how we think about what languages should provide, and a particular inspiration to the later design of Clojure reducers

-

Advent of Code 2020, Day 17 by Zach Tellman - a wonderful example of how to work in Clojure. write code in your editor, eval small exprs to your REPL, building iteratively up to a final solution

Clojure Engineer (Remote)

Brave Clojure Jobs 24 06 2021

Clojure Engineer (Remote)

Build the infrastructure powering our automated portfolio management platform!

Composer is a no-code platform for automated investment management. Composer allows you to build, test, deploy, and manage automated investing strategies - all without writing a line of code.

As an early backend engineer at Composer you will:

- Be responsible for designing and building critical pieces of our infrastructure

- Work closely with the executive team to guide our decisions regarding technical architecture

Projects you will work on:

- Creating a language that clients can use to define any conceivable trading strategy ("strategies as data")

- Determining the best way to collaborate on, share, and/or monetize strategies

- Allowing clients to develop custom logic to further personalize their strategies

- See here for more ideas!

We're looking for someone who:

- Loves Clojure! (Clojurescript a bonus)

- Has familiarity with cloud platforms (We use GCP)

- Will be a technical thought leader within the company

- Understands database design

- Makes educated decisions when faced with uncertainty

What's it like to work at Composer?

- We believe diverse perspectives are necessary if we aim to disrupt finance. To that end, we are an equal opportunity employer and welcome a wide array of backgrounds, experiences, and abilities.

- We believe the simplest solution is most likely the best one

- We encourage self-improvement and learning new skills

- We are venture-backed by top investors

- We are 100% remote :)

- We offer generous equity!

- Our Values

Nos podrían estar detectando

NeoFronteras 24 06 2021

I complete. You?

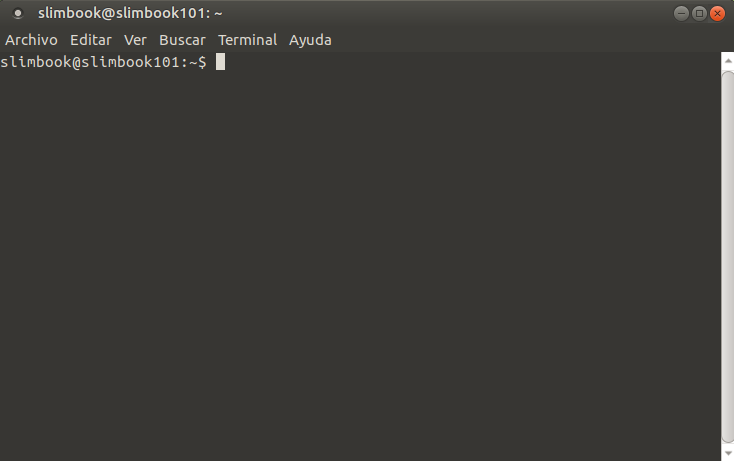

Manuel Uberti 24 06 2021

Tracking the Emacs development by building its master branch may not be a smooth experience for everyone, but for the average enthusiast is the perfect way to see their favourite tool evolve, trying experimental things, and reporting back to the core developers to help them. Sure, one has to deal with occasional build failures, but with Git at one’s service it’s always easy to reset to a working commit and find happiness again.

Recently a shiny new mode has been implemented on master:

icomplete-vertical-mode. Now, if you had the chance to read this blog in the

past you know that when it comes to candidate completion I am a gangsta jumping

back and forth among packages with excessive self-satisfaction. But you should

also already know that I like to use as many Emacs built-ins as possible. Hence,

I could not wait to give icomplete-vertical-mode a try.

Turning it on is trivial:

(icomplete-mode +1)

(add-hook 'icomplete-mode-hook #'icomplete-vertical-mode)

Since other completion systems have spoiled me, I prefer scrolling over the

rotating behaviour of the standard icomplete:

(setq icomplete-scroll t)

Furthermore, I always want to see the candidate list:

(setq icomplete-show-matches-on-no-input t)

This is pretty much it. I use icomplete-fido-backward-updir to move up one

directory and I have exit-minibuffer bound to C-j for convenience.

I have been using icomplete-vertical-mode daily for a while now and everything

has been working as expected. For the record, this mode works seamlessly with

your favourite completion-styles settings, so moving from, say, Vertico to

icomplete-vertical-mode is simple and easy.

I complete. You?

Manuel Uberti 24 06 2021

Tracking the Emacs development by building its master branch may not be a smooth experience for everyone, but for the average enthusiast is the perfect way to see their favourite tool evolve, trying experimental things, and reporting back to the core developers to help them. Sure, one has to deal with occasional build failures, but with Git at one’s service it’s always easy to reset to a working commit and find happiness again.

Recently a shiny new mode has been implemented on master:

icomplete-vertical-mode. Now, if you had the chance to read this blog in the

past you know that when it comes to candidate completion I am a gangsta jumping

back and forth among packages with excessive self-satisfaction. But you should

also already know that I like to use as many Emacs built-ins as possible. Hence,

I could not wait to give icomplete-vertical-mode a try.

Turning it on is trivial:

(icomplete-mode +1)

(add-hook 'icomplete-mode-hook #'icomplete-vertical-mode)

Since other completion systems have spoiled me, I prefer scrolling over the

rotating behaviour of the standard icomplete:

(setq icomplete-scroll t)

Furthermore, I always want to see the candidate list:

(setq icomplete-show-matches-on-no-input t)

This is pretty much it. I use icomplete-fido-backward-updir to move up one

directory and I have exit-minibuffer bound to C-j for convenience.

I have been using icomplete-vertical-mode daily for a while now and everything

has been working as expected. For the record, this mode works seamlessly with

your favourite completion-styles settings, so moving from, say, Vertico to

icomplete-vertical-mode is simple and easy.

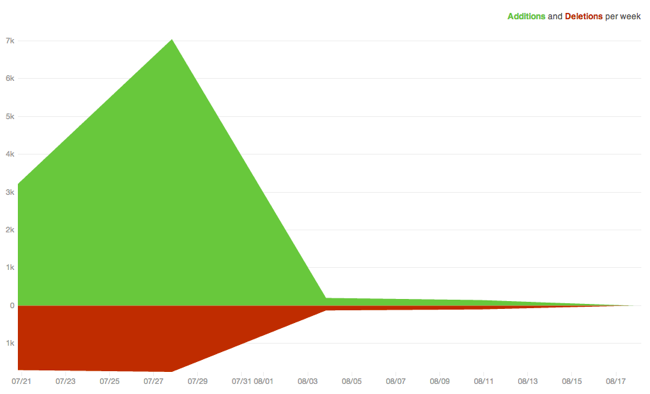

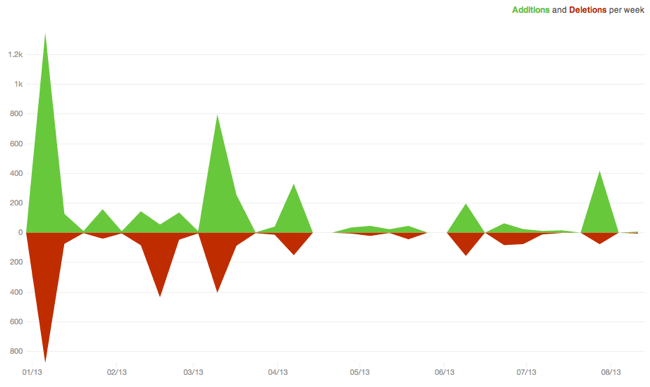

Introducing the new GitHub Issues

The GitHub Blog 23 06 2021

666

Extra Ordinary 23 06 2021

Don Clojure de la Mancha

Programador Web Valencia 22 06 2021

Próximamente será publicado el libro: Don Clojure de la Mancha. ¿Quieres ser el primero en enterarte? Tan solo debes dejar un comentario. El email que introduzcas no será visible y solo le daré uso para avisarte del momento y lugar. NO TE ESTAS SUSCRIBIENDO A UNA NEWSLETTER.

Gracias por apoyar al software libre, la programación funcional y la comunidad hispana de Clojure.

Custom Scrollbars In CSS

Ahmad Shadeed Blog 22 06 2021

Los blogs están a la orden del día. Cada vez son más las personas que ganan dinero con sus propios dominios, apostando por una idea de negocio innovadora. Si tú también has optado por la creación de un blog, estoy seguro de que sabes de lo que te hablo. Pero entonces ocurre el primer desencuentro: ... Read more

The post 5 errores de SEO que perjudican tu blog appeared first on No sin mis cookies.

Pandemic Progress

Stratechery by Ben Thompson 21 06 2021

Accountability

Work Chronicles 20 06 2021

The post Accountability appeared first on Work Chronicles.

Paraceratherium linxiaense

NeoFronteras 20 06 2021

En caso que intentes ordenar una consulta de la base de datos y no uses PosgreSQL como base de datos principal te vas a encontrar un pequeño problema: Cuando hay acentos no se ordena de una forma lógica. Si utilizas SQLite te lo habrás encontrado de frente. Por ejemplo, si yo tengo una tabla con nombres e intento ordenarlas con order_by pasaré de:

Zaragoza, Ávila, Murcia, Albacete...

al siguiente orden

Albacete, Murcia, Zaragoza, Ávila...

Las palabras con acento, con ñ u otros carácteres acabarán al final de la lista.

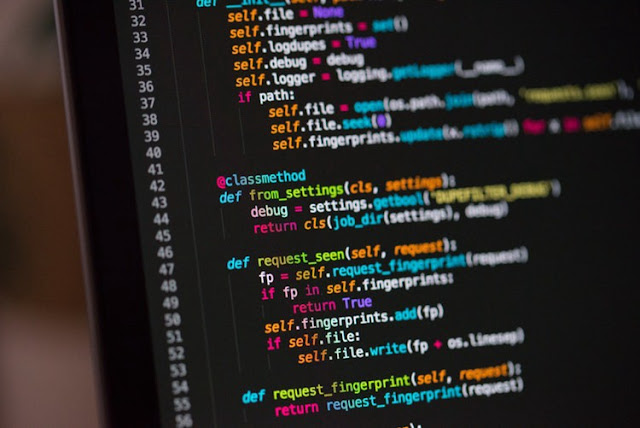

Para arreglarlo he creado la siguiente solución que debes copiarlo en el archivo donde necesites realizar el orden deseado.

import re

import functools

def order_ignore_accents(queryset, column):

"""Order Queryset ignoring accents"""

def remove_accents(raw_text):

"""Removes common accent characters."""

sustitutions = {

"[àáâãäå]": "a",

"[ÀÁÂÃÄÅ]": "A",

"[èéêë]": "e",

"[ÈÉÊË]": "E",

"[ìíîï]": "i",

"[ÌÍÎÏ]": "I",

"[òóôõö]": "o",

"[ÒÓÔÕÖ]": "O",

"[ùúûü]": "u",

"[ÙÚÛÜ]": "U",

"[ýÿ]": "y",

"[ÝŸ]": "Y",

"[ß]": "ss",

"[ñ]": "n",

"[Ñ]": "N",

}

return functools.reduce(

lambda text, key: re.sub(key, sustitutions[key], text),

sustitutions.keys(),

raw_text,

)

return sorted(queryset, key=lambda x: remove_accents(eval(f"x.{column}")))

Supongamos, por ejemplo, que necesitas ordenar unos pueblos o municipios. Lo que haría cualquier desarrollador es:

towns = Town.objects.all().order_by('name')

En su lugar, omitirás order_by y añadirás la consulta a la función order_ignore_accents. Y como segundo argumento la columna que quieres usar para ordenar. En este caso será name.

towns_order = order_ignore_accents(Town.objects.all(), 'name')

Se ordenará como esperamos.

Albacete, Ávila, Murcia, Zaragoza...

Honest Review

Work Chronicles 19 06 2021

The post Honest Review appeared first on Work Chronicles.

Vuelve Drupal al podcast y para esta ocasión contamos con la compañía del desarrollador web especializado en Drupal Borja Vicente, creador de la web y el canal escueladrupal.com Invitamos a Borja para que nos cuente muchas cosas sobre su proyecto y el estado actual de Drupal. Borja desarrolla en backend con Drupal desde hace más de 10 años y actualmente su labor profesional se desarrolla con Drupal, aunque suele también experimentar con Symfony, Laravel, Django y también Ruby on Rails. Además Borja también tiene publicado cursos especializados en Drupal a través de la plataforma Udemy.

Con Borja tratamos muchas cuestiones relacionados con Drupal y su proyecto de contenidos:

- Evolución de Drupal hasta su última versión

- Comunidad Drupal a nivel internacional y nacional

- Proyectos o casos de uso ideales para desarrollar con Drupal 9?

- Recomendaciones esenciales para gestionar una instalación de Drupal

- Motivaciones para crear Escuela Drupal y lo que gustaría conseguir.

- Entorno de desarrollo usado para Drupal.

- Módulos que más te ayudan al desarrollo web con Drupal.

Muy agradecidos a Manu por propiciar en Twitter este episodio sobre Drupal con Borja.

Visita la web del podcast donde encontrarás los enlaces de interés discutidos en el episodio. Estaremos encantados de recibir vuestros comentarios y reacciones.

Nos podéis encontrar en:

- Web: republicaweb.es

- Canal Telegram: t.me/republicaweb

- Grupo Telegram Malditos Webmasters

- Twitter: @republicawebes

- Facebook: https://www.facebook.com/republicaweb

¡Contribuye a este podcast!. A través de la plataforma Buy me a coffee puedes realizar una mínima aportación desde 3€ que ayude a sostener a este podcast. Tú eliges el importe y si deseas un pago único o recurrente. ¡Muchas gracias!

Senior Software Engineer (Clojure)

About Us

With ever-growing workloads on the cloud, companies face significant challenges in managing productivity and spending, and maximizing impact to their businesses. Compute Software is addressing a huge market opportunity to help companies make good business decisions and run optimally on the public cloud. We're building a powerful platform to give customers the tools they need to gain transparency, optimize their usage, and execute change across their organizations.

We're a small, distributed team, currently spanning California to Quebec, and we offer early stage market-rate compensation (including equity), health and dental insurance, and 401K benefits. You'll be joining a venture capital-backed, distributed team with ambitious goals, and you will have the ability to make a direct and lasting impact on the team and product.

Your Role

Be one of the earliest employees and join our early-stage engineering team as a Senior Software Engineer. You will be essential in shaping the features and functionality of our SaaS platform, culture and processes.

You'll spend your day enveloped in Clojure. The backend is written in Clojure and driven by data in Datomic and InfluxDB. The frontend is written in ClojureScript using re-frame and communicates with the backend using Pathom. We deploy to AWS Fargate and Datomic Ions.

For product development, we follow Shape Up. We use Notion, Slack, and ClubHouse.

What will you do at Compute Software?

- Write Clojure and ClojureScript.

- Design, build, and deploy features and bug fixes across the entire stack.

- Become an expert in all the nuances of the various cloud platforms like Amazon Web Services, Google Cloud, and Microsoft Azure.

- Provide product feedback and evaluate trade-offs to impact product direction.

- Debug production problems.

What We're Looking For Passion - you are excited about the large, high-growth cloud computing market and figuring out how to help customers, who are using cloud computing solutions today. You are excited by being one of the earliest employees and getting to work with a small team.

Engineering experience - you're a Clojure practitioner with 6+ years of professional experience. You know what it takes to create large-scale b2b software. You can create effective and simple solutions to problems quickly, and communicate your ideas clearly to your teammates.

Product-minded - you love building products and you care about the details of creating a great user experience. You have an interest in how users will use our platform and the impact we will have on them. You can balance your consideration of the product and user requirements with technical complexity and implementation details to make appropriate decisions when things are unclear.

Effective communication - you're great at communicating. If something is unclear you reach out and ask questions. You're comfortable owning, communicating and presenting information on specific projects or initiatives, both in writing and in person.

Organizational and project management - you are highly organized and able to self-manage projects in a fast-moving company. You are able to take high level goals and break them down into achievable steps.

Updated Debian 10: 10.10 released

Debian News 19 06 2021

buster). This point release mainly adds corrections for security issues, along with a few adjustments for serious problems. Security advisories have already been published separately and are referenced where available.

Turn on your cameras

Work Chronicles 18 06 2021

The post Turn on your cameras appeared first on Work Chronicles.

Clojure Deref (June 18, 2021)

Clojure News 18 06 2021

Welcome to the Clojure Deref! This is a periodic link/news roundup for the Clojure ecosystem. (@ClojureDeref RSS)

Highlights

-

HOPL IV (History of Programming Languages) at PLDI 2021 is happening on Monday and Tuesday and includes a talk from Rich Hickey about the History of Clojure paper. Registration is still available and the conference is online and features many other fine language developers!

-

The results are out from the JVM Ecosystem Report 2021 and Clojure continues to make a strong showing as one of the most popular JVM languages (other than Java), rising from 2.9% last year to 8.4% this year. Lots of other interesting tidbits in there as well.

Sponsorship Spotlight

Lately Christophe Grand and Baptiste Dupuch have been teasing their work on a new ClojureDart runtime with Flutter support. You can support their work on GitHub: cgrand dupuchba. Nubank (users of both Clojure and Flutter) are now supporting both!

Podcasts and videos

-

LispCast - Eric Normand talks about stratified design

-

defn - Vijay Kiran and Ray McDermott interview Paula Gearon

-

REPL-driven development - demo from Jakub Holý

Blogs, discussions, tutorials

-

Clojure Transducers - Joanne Cheng explains transducers

-

Clojure’s Destructuring - Daniel Gregoire dives into destructuring

-

Better performance with Java arrays in Clojure - Daw-Ran Liou on using Java arrays in Clojure

-

Backpressure - Kenny Tilton talks about core.async and ETL

-

Fun of clojure - wrap some code around data - @Sharas_ on the data ethos of Clojure

-

Lambda Island is Changing - Arne Brasseur and his merry band of Clojurists at Gaiwan are changing directions a bit

-

Should you adopt Clojure at your company? - Shivek Khurana, TLDR: yes! :)

Libraries and Tools

Some interesting library updates and posts this week:

-

ordnungsamt - a tool for running ad-hoc migrations over a code repository

-

clj-github - a library for working with the GitHub developer API

-

umschreiben-clj - extensions to rewrite-clj

-

Copilot - Tony Kay teased a new upcoming code analysis tool for Clojure and ClojureScript

-

scittle - Michiel Borkent did the first release of the SCI interpreter for use in script tags

-

clojure-lsp - Eric Dallo released a new version with enhanced path support for deps.edn projects

-

holy-lambda - Karol Wójcik released a new version

-

honeysql - Sean Corfield added :distinct syntax and some other features and fixes

-

Fulcro - Tony Kay released 3.5.0-RC1 with more support for non-React apps

-

refl - Michiel Borkent released a new example project to clean up reflection configs for GraalVM for Clojure projects

Fun and Games

-

Fidenza - Tyler Hobbs has a long history of doing interesting generative art with Clojure and he has published a rundown of his newest generative algorithm. Not explicitly Clojure but fascinating to read.

-

ClojureScript racing game - Ertuğrul Çetin published this game this week

Throwback Friday (I know, I’m doing it wrong)

In this recurring segment, we harken back to a talk from an older time to a favorite talk of yore. This week, we’re featuring:

-

Why is a Monad Like a Writing Desk? by Carin Meier from Clojure/West 2012

In this lovely story from 2012, Carin Meier talks about monads through the lens of Clojure and Alice in Wonderland.

Contaminación luminosa e insectos

NeoFronteras 17 06 2021

Virtual Meetings

Work Chronicles 16 06 2021

The post Virtual Meetings appeared first on Work Chronicles.

If you build a web application, chances are good that you’ve received user requests for dark mode support in the past couple of years. While some users may simply prefer the aesthetics of dark UI, others may find that dark mode helps ease eye strai

The post Dark Mode for HTML Form Controls appeared first on Microsoft Edge Blog.

665

Extra Ordinary 16 06 2021

Nos vamos al Congreso esLibre 2021

República Web 15 06 2021

En este episodio Andros Fenollosa y David Vaquero hablan sobre la edición del Congreso esLibre 2021, una nueva edición en formato virtual, sobre tecnologías libres enfocadas a compartir conocimiento. Esta edición contará con la participación de una charla de Andros sobre su sistema Glosa de comentarios para sitios estáticos y un taller de David sobre su contenedor Docker para Drupal.

En el episodio también se habla sobre la participación de algunas personas que han pasado por el podcast como Eduardo Collado, Sergio López, Lorenzo Carbonell, Rubén Ojeda o Jesús Amieiro. Como ha comentado David Vaquero con anterioridad, en esta edición se ha apostado por involucrar a la comunidad en español de podcasts tecnológicos, para lograr dar mayor difusión a los contenidos del Congreso.

Visita la web del podcast donde encontrarás los enlaces de interés discutidos en el episodio. Estaremos encantados de recibir vuestros comentarios y reacciones.

Nos podéis encontrar en:

- Web: republicaweb.es

- Canal Telegram: t.me/republicaweb

- Grupo Telegram Malditos Webmasters

- Twitter: @republicawebes

- Facebook: https://www.facebook.com/republicaweb

¡Contribuye a este podcast!. A través de la plataforma Buy me a coffee puedes realizar una mínima aportación desde 3€ que ayude a sostener a este podcast. Tú eliges el importe y si deseas un pago único o recurrente. ¡Muchas gracias!

Creating accessible products means most of all being aware of the usability issues your designs and code can cause. When creating new products, Microsoft follows a strict workflow of accessibility reviews of designs, code reviews and mandatory audits

The post Improving contrast in Microsoft Edge DevTools: A bugfix case study appeared first on Microsoft Edge Blog.

The Cicilline Salvo

Stratechery by Ben Thompson 15 06 2021

Measure Twice, Cut Once

MonkeyUser 15 06 2021

The Importance of Learning CSS

Josh Comeau's blog 13 06 2021

Clojure Deref (June 11, 2021)

Clojure News 11 06 2021

Welcome to the Clojure Deref! This is a periodic link/news roundup for the Clojure ecosystem. (RSS feed)

Highlights

This week Nubank announced a new $750M investment, led by $500M from Berkshire Hathaway at a $30B valuation. Nubank is the largest user of Clojure and Datomic in the world and a great example of the benefits of Clojure’s approach to managing complexity at scale.

Chris Nuernberger presented a great talk this week for London Clojurians about his work on high performance data processing with the dtype-next and tech.ml.dataset libraries.

The ClojureD conference last weekend was great with lots of interesting Clojure (and some non-Clojure) talks! Keep an eye out for videos soon.

Podcasts

We have a bumper crop of Clojure-related podcast episodes this week, put these in your ears…

-

Cognicast - Christian Romney interviews Jarrod Taylor from the Datomic team

-

Get Smarter and Make Stuff - Craig Andera interviews Michael Fogus from the Clojure core team

-

Lost in Lambduhhs - Jordan Miller interviews Alex Miller from the Clojure core team

-

ClojureScript Podcast - Jacek Schae interviews Tommi Reiman about Malli

-

defn - Vijay Kiran and Ray McDermott interview Chris Badahdah about Portal

Libraries and Databases

Some interesting library updates and posts this week:

-

Mirabelle - 0.1.0 of this stream processing tool inspired by Riemann - check out the docs and a use case

-

sicmutils - Sam Ritchie released version 0.19.0 of this math and physics based library (based on the books by Sussman and Wisdom)

-

Cybermonday - Kiran Shila releases the first release of this Clojure data interface to Markdown (like Hiccup for Markdown)

-

HoneyEQL - Tamizhvendan S introduced 0.1.0-alpha36 for EQL queries to relational databases

-

Expectations - Sean Corfield released 2.0.0-alpha2 of this clojure.test-compatible implementation of Expectations

-

Snoop - Luis Thiam-Nye announced the initial release of a library for runtime function validation using Malli

-

OSS Clojure DBs - a summary and comparison of open-source Clojure databases (but don’t forget Datomic! :)

Blogs, discussions, tutorials

-

Tetris in ClojureScript - by Shaun Lebron

-

Apache Kafka & Ziggurat - Ziggurat is an event stream processing tool written in Clojure and this article shows how to use it to consume events from Kafka

-

Why are Clojure beginners just like vegans searching for good cheese? - on Lambda Island

-

Ping CRM on Clojure - a demo of implementing Ping CRM on Clojure+ClojureScript

-

Organizing Clojure code - a discussion from Clojureverse

-

An Animated Introduction to Clojure - by Mark Mahoney

Throwback Friday (I know, I’m doing it wrong)

In this recurring segment, we harken back to a talk from an older time to a favorite talk of yore. This week, we’re featuring:

-

Clojure: Programming with Hand Tools by Tim Ewald

Is it about woodworking? Is it about Clojure? Is it about how to work? Yes.

Blacktocats turn five

The GitHub Blog 10 06 2021

Boop!

Josh Comeau's blog 10 06 2021

Let's Bring Spacer GIFs Back!

Josh Comeau's blog 10 06 2021

The Rules of Margin Collapse

Josh Comeau's blog 09 06 2021

What the heck, z-index??

Josh Comeau's blog 09 06 2021

Building a Magical 3D Button

Josh Comeau's blog 09 06 2021

How I Built My Blog

Josh Comeau's blog 09 06 2021

664

Extra Ordinary 09 06 2021

Exposed

MonkeyUser 08 06 2021

Data Platform Engineer

Brave Clojure Jobs 07 06 2021

Data Platform Engineer

Description

Analytical Flavor Systems is a venture-backed startup that models human sensory perception of flavor, aroma, and texture using proprietary machine learning in order to predict consumer preference of food and beverage products. The work we do allows our clients in the food & beverage industry to ask and answer questions about:

- their competitive landscape ("what do people like and dislike about my competitors' products?")

- optimizing existing products ("how can I make this cookie taste better?")

- novel flavor combinations for new product development ("would people like it if I combined matcha and strawberry in yogurt?")

Our data science capabilities are evolving from report generation to a data platform. That's where you come in.

The work expected of a data platform engineer at Analytical Flavor Systems covers several major areas:

- Building out a data model on Datomic to capture the information generated by our day-to-day data processing tasks in an immutable and readily queryable data store.

- Creating a data platform application layer to serve the needs of our data science team, our web console and our mobile data collection app.

- Rewriting existing data science code for execution in a distributed rather than single-machine environment.

- Maintaining and enhancing batch processing jobs to make them faster, more reliable, and more observable.

- Refactoring an existing codebase to be more modular: separating data transformations, modeling, and prediction steps into discrete functions with well-understood inputs and outputs, while testing for regressions in predictive capabilities.

Depending on your background and areas of expertise, your day-to-day work may focus more on one of these areas than others, but you should be able to keep the big picture in mind, and understand how the changes you make to one part of our system affect the whole. Your work will improve our ability to execute this code reliably, and replicate previous results. This work will also help us observe and capture the outputs of the analytical operations we perform so we get better insight into the state of the systems built atop our data science code.

You will be expected to become comfortable working in both Clojure and R, though no prior experience in R is required. This role offers you the chance to help develop the language of our research domain, which may help us identify potential new avenues of theoretical research in human sensory perception.

We are only considering candidates with USA work authorization or work visa (including OPT). AFS can sponsor H1-B renewals or transfers.

Requirements

Candidates should have at least 4 years of total programming experience, with at least 1 year of work in either a data engineering context or in building backend systems. Experience with Clojure or other functional programming languages is a plus, but not a requirement. Functional programming is as much a style and idiom of development as it is a family of languages. Candidates that have experience building modular systems that put data front and center, regardless of the implementation language, should be attracted to this role.

Candidates with experience supporting the work of researchers and data scientists are also strongly encouraged to apply. Have you made an analytical method production-ready after reading through someone else's prototype code? Are you interested in interoperability between R and Clojure? Have you helped deploy and monitor models in production? Experience with these questions gives you a good understanding of the requirements and scope of the systems we build.

The company is roughly 15 people total, so candidates will be working closely with other teams and areas of the business. Good communication skills, especially across varying levels of technical depth and skill, are preferred.

A good candidate should have experience in at least two of the following areas:

- Data science and analytics: you have enabled more powerful access to data for both technical and non-technical stakeholders. You understand how to support and enhance systems based on machine learning, and aren't afraid of diving in to build a more efficient implementation of an algorithm than one provided by a library.

- Data modeling: you have, either on your own or as part of a team, designed or extended a relational database schema to support application and business requirements.

- System design and maintenance: you know how to build and extend existing systems to make them more observable, fault-tolerant, and performant. You can ssh into a remote box to contextualize a problem that doesn't have an obvious cause.

- Automated QA and testing: you know what the invariant properties of both individual functions and system components are, and can represent those properties in code.

Benefits

- Competitive salary

- Standard benefits package (health insurance/vision/dental + 401k)

- Equity stake (Restricted Stock Units with 4-year annual vesting schedule)

- Remote-friendly (who isn't these days?). While we do plan on an eventual return to our office space in Manhattan once it's safe, immediate relocation to the NYC area is not an expectation of this role.

- That said, if you do end up in our NYC office you'll be able to join regular in-person tasting panels to get hands-on experience with the sensory data collection methods we use.

- Unlimited vacation policy

- Professional development budget

Cómo instalar Git en Centos 7

ochobitshacenunbyte 07 06 2021

Aprendemos a instalar Git en Centos 7. Aunque este programa de control de versiones ya viene en los repositorios de Centos o de Red Hat, si utilizamos la distribución del sombrero rojo, lo cierto...

La entrada Cómo instalar Git en Centos 7 se publicó primero en ochobitshacenunbyte.

Trabajar con un motor de plantillas en PHP simplifica la labor de concadenar variables en ficheros con mucho texto, como puede ser un html. Cualquier Framework que imagines incorpora un sistema similar pero podemos usarlo de manera independiente para pequeñas páginas o funcionalidades.

Primero debemos instalar Twig en su versión más reciente en la raíz del proyecto. Esto lo podemos realizar con composer.

composer require "twig/twig:^3.0"

Ahora creamos una carpeta donde almacenaremos todas las plantillas.

mkdir templates

Dentro creamos el archivo contacto.txt con el siguiente contenido.

Hola {{ nombre }},

gracias por escribirnos desde {{ email }} con el asunto "{{ asunto }}".

¡Nos vemos!

Como es un ejemplo, vamos a crear otro archivo, llamado contacto.html, con el contenido:

<h1>Hola {{ nombre }},</h1>

<p>gracias por escribirnos desde {{ email }} con el asunto "{{ asunto }}".</p>

<p>¡Nos vemos!</p>

Todas las variables entre {{ }} serán sustituidas por las variables que se definan. Si no ha quedado claro en breve lo entenderás.

En estos momentos disponemos de 2 plantillas con diferentes extensiones y formatos. No es obligatorio disponer de varias plantillas, busque aprecies que funciona de manera independiente con cualquier formato en texto plano.

Ahora creamos un archivo PHP donde ejecutaremos el código. Podemos llamarlo, por ejemplo, renderizar.php. Añadimos:

// Cargamos todas las extensiones. En este caso solo disponemos de Twig

require_once('vendor/autoload.php');

// Indicamos en Twig el lugar donde estarán las plantillas.

$loader = new \Twig\Loader\FilesystemLoader('templates');

// Cargamos las plantillas al motor de Twig

$twig = new \Twig\Environment($loader);

// Definimos las variables que deseamos rellenar en las plantillas.

$variablesEmail = [

'nombre' => 'Cid',

'email' => 'cid@campeador.vlc',

'asunto' => 'Reconquista'

];

// Renderizamos con la plantilla 'contacto.txt'

$plantillaPlana = $twig->render('contacto.txt', $variablesEmail);

// Renderizamos con la plantilla 'contacto.html'

$plantillaHTML = $twig->render('contacto.html', $variablesEmail);

Si yo hiciera un echo de cada variable podemos ver el trabajo realizado.

echo $plantillaPlana;

/**

Hola Cid,

gracias por escribirnos desde cid@campeador.vlc con el asunto "Reconquista".

¡Nos vemos!

**/

echo $plantillaHTML;

/**

<h1>Hola Cid,</h1>

<p>gracias por escribirnos desde cid@campeador.vlc con el asunto "econquista".</p>

<p>¡Nos vemos!</p>

**/

Y eso es todo.

Es realmente interesante para trabajar con emails, plantillas más complejas o en la búsqueda de renderizar un PDF. Sea cual sea tu objetivo final, disponer de un motor de plantillas en PHP hará más fácil tu trabajo.

A diferencia de otros sectores, los profesionales de las habilidades relacionadas con la tecnología, suelen contar con más opciones y oportunidades laborales. Por si fuera poco ese mercado de programadores, desarrolladores y consultores tecnológicos, opta desde hace tiempo, a trabajos en remoto y a condiciones laborales que durante la pandemia han experimentado un notable auge.

Los developers están por tanto en una especie de burbuja laboral, donde a la hora de valorar un puesto de trabajo o un cambio, existen diferentes motivaciones que merece la pena discutir.

En este episodio queremos hablar sobre los motivos que tienen los developers a la hora de afrontar cambios laborales y el punto de vista que a menudo tienen con respecto a su vida profesional. Aunque en muchas ocasiones esas motivaciones coincidan con otros perfiles profesionales, creemos muy interesante poder contar las experiencias o casos que hayamos visto o vivido.

El próximo episodio del podcast irá sobre los motivos que llevan a los «developers» a cambiar de trabajo. ¿Qué te motiva **principalmente** para valorar un cambio de trabajo?

— Podcast República Web (@republicawebes) June 4, 2021

Visita la web del podcast donde encontrarás los enlaces de interés discutidos en el episodio. Estaremos encantados de recibir vuestros comentarios y reacciones.

Nos podéis encontrar en:

- Web: republicaweb.es

- Canal Telegram: t.me/republicaweb

- Grupo Telegram Malditos Webmasters

- Twitter: @republicawebes

- Facebook: https://www.facebook.com/republicaweb

¡Contribuye a este podcast!. A través de la plataforma Buy me a coffee puedes realizar una mínima aportación desde 3€ que ayude a sostener a este podcast. Tú eliges el importe y si deseas un pago único o recurrente. ¡Muchas gracias!

Clojure Deref (June 4, 2021)

Clojure News 04 06 2021

Welcome to the Clojure Deref! This is a new periodic (thinking bi-weekly) link/news roundup for the Clojure ecosystem. We’ll be including links to Clojure articles, Clojure libraries, and when relevant, what’s happening in the Clojure core team.

Highlights

ClojureScript turns 10 this week! Happy birthday ClojureScript! :cake: We mark this from the first commit by Rich Hickey in the repo. Several thousand commits later things are still going strong and David Nolen and Mike Fikes continue to lead the project. ClojureScript recently released version 1.10.866.

The StackOverflow developer’s survey for 2021 just opened. Last year they removed Clojure from the survey because they were scared we were growing too powerful (I assume). But this year’s survey includes Clojure as an option again, so let them know you’re out there! (It also seems a lot shorter this year.)

The :clojureD Conference is just hours away! Ticket sales have ended but presumably talks will be made available afterwards. If you’re going, we’ll see you there!

Experience reports

This week we saw several interesting Clojure experience reports worth mentioning:

-

Red Planet Labs gave an overview of their codebase and some of the techniques they use pervasively - using Schema, monorepo, Specter for polymorphic data, Component, with-redefs for testing, macros and more.

-

Jakub Holý at Telia talked about the importance of interactive development with Clojure.

-

Crossbeam did a talk at Philly Tech Week about why they bet on Clojure and their experience with hiring.

-

Shivek Khurana talked about how to find a job using Clojure. There are now many companies using and hiring for Clojure, although sometimes it’s challenging to find a Clojure job that is a good match for your location and/or experience - these are some great tips!

Libraries

Some interesting library updates and posts this week:

-

Asami - Paula Gearon wrote a nice overivew of querying graph dbs

-

Joe Littlejohn at Juxt wrote an overview of the Clojure JSON ecosystem covering many popular libraries and their tradeoffs

-

odoyle-rules - Zach Oakes added a new section on defining rules dynamically

-

Reveal - Vlad wrote about viewing Vega charts in Reveal

-

Pathom - Wilker Lucio gives some updates on many features

Art

-

As always Jack Rusher has been up to making beautiful art with Clojure, in particular exploring 3D rendered attractors like the Golden Aizwa Attractor (the Clojure code) and Three-Scroll Uunified Attractor, and one made in bone. Hit his feed for lots more cool projects, often made with Clojure.

Feedback

You can find future episodes on the RSS feed for this blog. Should it be an email newsletter too?

Let us know!

Loading Weekend

MonkeyUser 04 06 2021

Passport

Stratechery by Ben Thompson 03 06 2021

Senior Software Developer

Brave Clojure Jobs 03 06 2021

Senior Software Developer

About LegalMate

Our mission is make access to justice affordable. In North America, almost $400 billion dollars is spent every year on legal services, yet 86% of of civil legal problems faced by low-income individuals receive either inadequate or no legal help at all. The legal system is intended to benefit us all, not just the top 10% of income earners.

We are bringing progressive and modern financial services to the legal world, opening the door for more people to access legal services and seek justice.

- We're VC-backed and well capitalized

- We have paying customers and exciting MoM growth

- Our founding team has an exceptional track record

- ($2.5 billion in shareholder value created in previous 3 ventures)

- Our first product is "Buy Now Pay Later" for legal services: think Affirm for lawyers

Role & Responsibilities

This is the first full-time hire on our engineering team, apart from our CTO. Consequently, candidates should expect a high degree of trust and autonomy. We intend to do great work together over the long-term, and we insist that anybody joining LegalMate at this stage is ready to grow with the company and take on more responsibility as we scale.

We are a Clojure shop and are looking to work with engineers eager to apply functional programming concepts. We are happy to train folks up on Clojure!

- Write production-grade Clojure and ClojureScript

- Review code, and provide constructive and useful feedback

- Create and collaborate on technical designs, document them

- Coach and mentor junior Clojurians in the making

- Introducing new developers to Clojure is a critical to our strategy

- Elevate the test-ability and re-usability of existing code

- Help evaluate and hire additional engineering team members (in the future)

Recommended Experience

These are provided for you to understand the relative skill and experience level of the candidates we're seeking. If you don't meet or exceed these 100%, that's OK! Please consider applying regardless.

- 5+ yrs of professional software development experience (Clojure or otherwise)

- 2+ yrs of Clojure development experience (professional or hobby)

- Previous experience working on small teams that scaled up

- You have a track record of leading successful projects

- You can give concrete examples of when you've received some hard feedback, and when you've had to deliver some hard feedback too

About You

This role is ideal for a senior developer who's comfortable with Clojure (or wants to learn), and who wants to participate in building and growing a world-class engineering team and company.

- You're more motivated to help humans than you are to solve coding puzzles

- You're comfortable with Clojure idioms (or want to learn) and functional programming concepts

- The idea of working with functional programming (Clojure) full-time is exciting and motivating

- You use data to help make decisions and inform designs

- You know how to manage your energy and time

Nice-to-have Qualities You Might Have

- You have prior experience in financial technology, or legal technology

- To you, working on legal financial tech doesn't sound boring: it sounds awesome

- You're ready to quit programming if you don't get to use functional programming in you're next position

Remote Clojure Developer

Brave Clojure Jobs 02 06 2021

Remote Clojure Developer

Do you like solving interesting problems? Are you passionate about working in one of the fastest growing product lines in the cybersecurity industry? Want a competitive salary and benefits to support a stable, high-quality life outside of work? Want to work for an organization that will assist in developing your skills, talents, help you grow? Are you someone who wants flexibility and good work/life balance? Do you love working from home?

If you enjoy working with a group of creative, talented and enthusiastic individuals on problems at the intersection of data design, transparency and interaction, then please apply so we can make the connection to the decision makers for this opening.

Required: - Bachelor’s Degree - 8+ years industry experience - 2+ years’ experience in Clojure/ClojureScript

Ideal but not Required: - Advanced UI and visualization in the browser (SVG, D3, Grammar of Graphics, Tufte, Bertin) - Systems architecture (Object orientation, patterns, service orientation, reactive, functional-relational, and back again) - Logic programming (Prolog, rule systems) - Databases, inverted indexes, message queueing (Elasticsearch, Kafka, etc.) - Provisioning and configuration management (cough, cloud)

On behalf of our Client, we are seeking 3 Remote Clojure Developers to join a team of experienced Senior Clojure developers to on their Threat Response team. They use Clojure to mesh large volumes of high dimensional network, host and service information with taxonomic information about malicious software. Much of our work involves understanding and reasoning about this data, which describes the behavior of systems, and by extension, the capabilities, and intentions of these systems’ users.

Consequently, there is a lot of room to explore and apply techniques of logic programming in a practical, useful, fascinating, expansive, and evolving problem domain. The overarching goal is to provide tools that benefit the security of their end customers’ infrastructure.

The Threat Response team is distributed across North America and Europe, working from home, key characteristics included excellent verbal and written communication skills, sociability, and pride in carrying out duties and discharging responsibilities with exuberance and alacrity. The team draws on the best of agile themes and techniques of the past 30 years—continuous integration and deployment, testing, flexible collaboration with globally distributed team members via chat and video, and gradual, deliberate optimization of process in the name of keeping projects and releases flowing smoothly, in order to provide an excellent product to customers. The team is surrounded by talented QA, UX, support, documentation writers, and management - we hold ourselves to high standards.

Today we are excited to announce improved font rendering in the latest Canary builds of Microsoft Edge on Windows. We have improved the contrast enhancement and gamma correction to match the quality and clarity of other native Windows applications. F

The post Improving font rendering in Microsoft Edge appeared first on Microsoft Edge Blog.

663

Extra Ordinary 02 06 2021

Software Developer, Full Stack

Brave Clojure Jobs 02 06 2021

Software Developer, Full Stack

About Pilloxa

Pilloxa is on a mission to improve patients' adherence to their treatment. Non-adherence to one's treatment plan is all too common. It is estimated to be the root cause of every 10th hospitalization and 8 deaths daily, in Sweden alone. Adherence to treatment is hard, and at Pilloxa we have set our minds to making it easier.

Pilloxa is a medtech company based in Stockholm, Sweden working with the latest technologies in an effort to improve the patient journey and quality of life for patients. We work together with patients, healthcare and pharmaceutical companies in bringing together all actors that have an impact on patients' treatment.

Role

You'll be an integral part of our small and flat team, working closely with the product and building a first-class user experience. The app is the centerpiece of Pilloxa's service and this is where you'll likely spend most of your time hacking in ClojureScript. As we grow you'll also likely be extending our still small Clojure backend and dabble with all parts of the stack.

Preferred experience

The more boxes you tick, the better.

- Passion for making a positive impact in peoples' lives

- 2+ years full-stack engineer

- MSc in Computer Science or equivalent

- Experience with Clojure

- Experience with React Native

- Experience with reagent/re-frame

- Startup experience

Process

- Call with CTO

- Call with Co-founder

- Technical assignment (max 8h)

- Presentation of assignment

- Call with CEO

- Reference calls

Starting with Microsoft Edge 92, users can preview the Automatic HTTPS feature, which automatically switches your connections to websites from HTTP to HTTPS.

As you browse the web, you may notice that the Microsoft Edge address bar displays a “not

The post Available for preview: Automatic HTTPS helps keep your browsing more secure appeared first on Microsoft Edge Blog.

A look at how we can save our websites from ourselves, and the stories that keep us going.

The post May 2021 Weblog: Communities Long Gone appeared first on The History of the Web.

Una buena práctica es no dejar secretos, como contraseñas o Tokens, dentro del código. Y ni mencionar el peligro que conlleva subirlo a un repositorio. En gran medida se hace hincapié en esta omisión de variables por no dejar expuesto al resto del equipo un contenido sensible. Además otorga la posibilidad de jugar con diferentes credenciales durante el desarrollo, algunas pueden ser solo para hacer pruebas y otras las que serán usadas en el proyecto final.

Muchos Frameworks ya incorporan un sistema similar al que vamos a mencionar. En breve descubrirás que la técnica es tan sencilla que puedes montártelo por tu cuenta.

Primero crea un archivo plano con el siguiente contenido. Yo lo llamaré: .env

TOKEN=123456789

USER=TUX

PASSWORD=qwe123

Son 3 futuras variables de entorno con sus valores.

A continuación ejecuta el siguiente comando en el terminal, en la misma carpeta donde se encuentra el fichero. Convertirá cada línea del fichero en una variable de entorno.

export $(cat .env | egrep -v "(^#.*|^$)" | xargs)

Si quieres comprobar que se ha ejecutado correctamente puedes hacer un echo con cualquier variable añadiendo el prefijo $.

echo $TOKEN

123456789

Tarea terminada. Recuerda que si cierras el terminal o modificas el archivo, debes volver a ejecutar el comando.

Leer variables de entorno en PHP

$token = getenv('TOKEN');

$user = getenv('USER');

$password = getenv('PASSWORD');

echo $token;

// 123456789

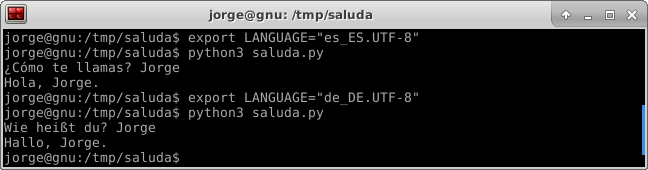

Leer variables de entorno en Python

import os

token = os.getenv('TOKEN')

user = os.getenv('USER')

password = os.getenv('PASSWORD')

Leer variables de entorno en Clojure

(def token (System/getenv "TOKEN"))

(def user (System/getenv "USER"))

(def password (System/getenv "PASSWORD"))

Aunque también puedes usar la dependencia environ.

Añade en project.clj.

[environ "0.5.0"]

Y usa con total libertad.

(require [environ.core :refer [env]])

(def token (env :TOKEN))

(def user (env :USER))

(def password (env :PASSWORD))

The Perils of Rehydration

Josh Comeau's blog 30 05 2021

La evolución del Jamstack

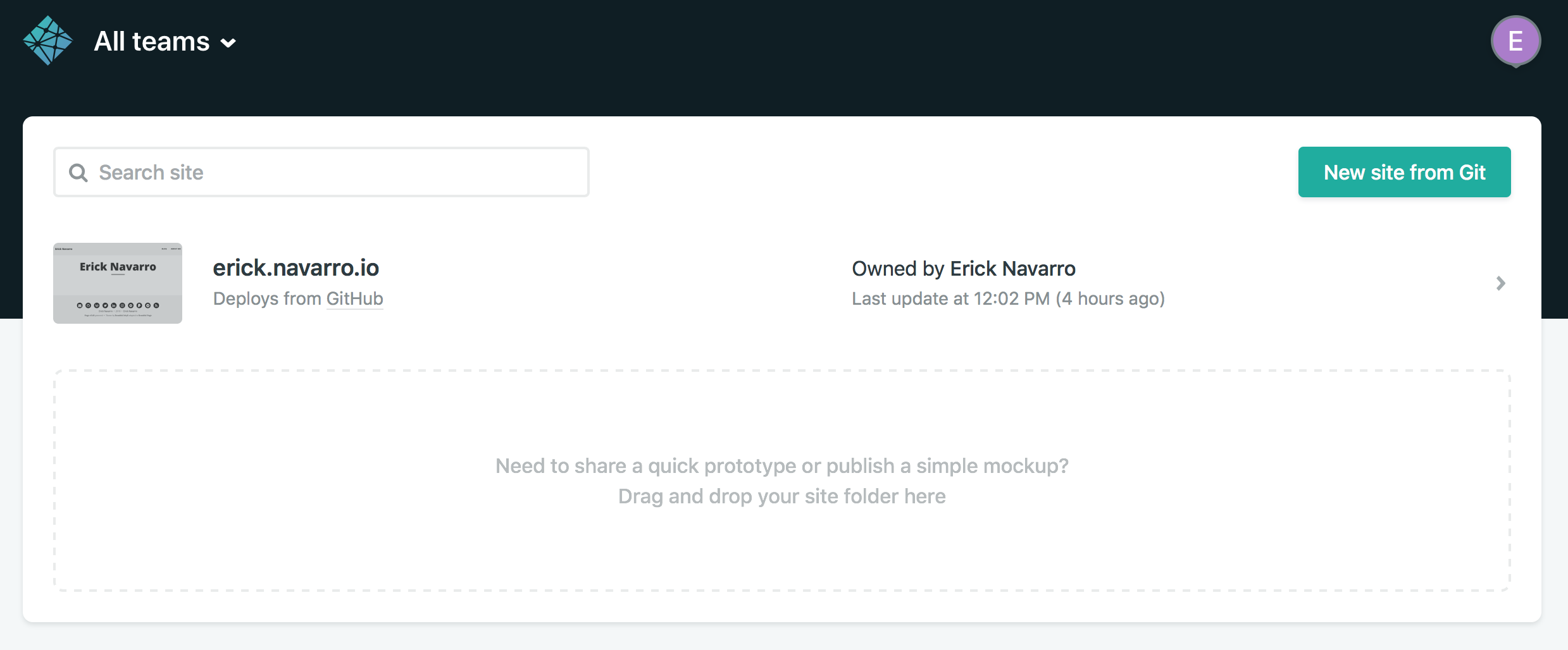

República Web 28 05 2021

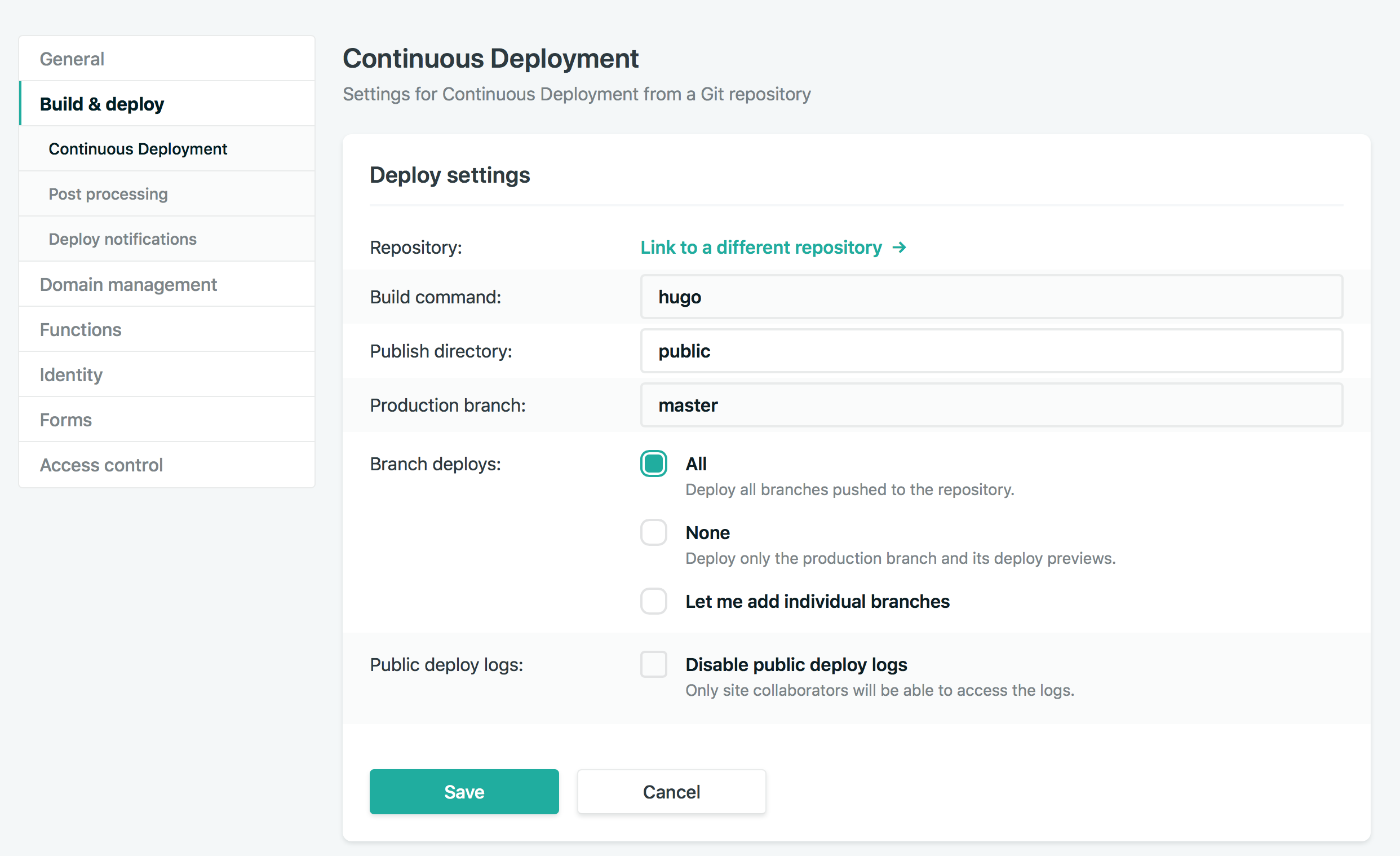

Dedicamos este episodio a comentar el artículo de Matt Biilmann publicado recientemente en Smashing Magazine titulado The evolution of Jamstack. Mathias Biilmann es CEO de Netlify y uno de los precursores del termino Jamstack. En este artículo Biilmann empieza rememorando su presentación en el 2016 durante la SmashingConf, donde daba a conocer los principios que sustentan la arquitectura Jamstack. Ahora en 2021 Matt quiere ofrecer una perspectiva sobre cómo están evolucionando las técnicas y las soluciones orientadas a esta arquitectura de Jamstack.

Empezamos el episodio hablando recordando los principios del Jamstack, como son la prioridad a que el front se construya lo antes posible y que exista un sólido desacople entre el front y el back. El segundo principio del Jamstack hace referencia a la obtención de datos bajo demanda (JavaScript y APIs).

En la segunda parte hablamos de los tres puntos que según Matt marcan la evolución del Jamstack:

- Renderizado Persistente Distribuido o DPR.

- Actualizaciones en streaming desde la capa de datos.

- Colaboración entre desarrolladores se hace popular.

Visita la web del podcast donde encontrarás los enlaces de interés discutidos en el episodio. Estaremos encantados de recibir vuestros comentarios y reacciones.

Nos podéis encontrar en:

- Web: republicaweb.es

- Canal Telegram: t.me/republicaweb

- Grupo Telegram Malditos Webmasters

- Twitter: @republicawebes

- Facebook: https://www.facebook.com/republicaweb

¡Contribuye a este podcast!. A través de la plataforma Buy me a coffee puedes realizar una mínima aportación desde 3€ que ayude a sostener a este podcast. Tú eliges el importe y si deseas un pago único o recurrente. ¡Muchas gracias!

Evercade VS Pre-Orders NOW OPEN

Evercade 28 05 2021

The Evercade VS Pre-Orders are now open! You can pre-order your Starter and Premium Packs from your preferred retailer right now. Find the link to your local retailer below, or visit the Retailers Page: AUSTRALIA PIXEL CRIB: Starter Pack – https://www.pixelcrib.com.au/collections/evercade/products/evercade-vs Premium Pack – https://www.pixelcrib.com.au/collections/evercade/products/evercade-vs-premium-pack CANADA AMAZON.CA: Starter Pack – https://www.amazon.ca/dp/B094F6GJ83 Premium Pack – https://www.amazon.ca/dp/B094F5ZGX8... View Article

The post Evercade VS Pre-Orders NOW OPEN appeared first on Evercade.

Evercade VS – What’s in the Box?

Evercade 27 05 2021